Migiel de Vos

Migiel de Vos works at SURF as team lead of Network Development on…

During SC16 (Supercomputing 2016), SURF presented a demo showing what is already possible with network function virtualisation. SC is the largest international conference for high performance computing, networking, storage and analysis. Together with the University of Amsterdam, the University of Groningen and the Netherlands eScience Center, we showed SC16 visitors what the Netherlands can do in this field.

As at SC15, this year's demo focused on network function virtualisation (NFV) combined with service function chaining (SFC) over a programmable network. New this year was the use of the Network Service Header (NSH) protocol, a standard currently being developed by the Internet Engineering Task Force (IETF) to enable SFC. NSH makes it easier to chain functions within a network without the end application having to be aware of it. Because concepts such as SFC and NSH are difficult to visualise, we used a virtual reality (VR) environment to demonstrate them.

NSH is a relatively new protocol for identifying service function paths in the network. It adds an extra header to each network packet. This header is retained while the packet travels through the service chain and contains information about the path to be followed, plus optional metadata. In this way the protocol decouples the service topology from the actual network topology.
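To make the header concrete, the sketch below packs and parses an NSH header. It follows the field layout of RFC 8300, the specification that was eventually published in 2018; the pre-standard draft supported by the patched Open vSwitch used in this demo differed in some details, so treat this as an illustration rather than a description of the demo's wire format. The class and function names are hypothetical. The key point is that the second 32-bit word carries the Service Path Identifier (SPI, which chain) and the Service Index (SI, where in the chain), which is all a switch needs to forward the packet independently of the physical topology.

```python
import struct
from dataclasses import dataclass
from typing import Tuple

# Field values follow RFC 8300 (published in 2018); the pre-standard
# draft implemented by the patched Open vSwitch in 2016 differed in places.
MD_TYPE_2 = 0x2            # variable-length (possibly empty) metadata
NEXT_PROTO_ETHERNET = 0x3  # the encapsulated packet is an Ethernet frame


@dataclass
class NshHeader:
    spi: int                 # Service Path Identifier (24 bits): which chain
    si: int                  # Service Index (8 bits): position in the chain
    ttl: int = 63            # hop limit (6 bits)
    md_type: int = MD_TYPE_2
    next_protocol: int = NEXT_PROTO_ETHERNET
    metadata: bytes = b""    # optional context, a multiple of 4 bytes


def pack_nsh(h: NshHeader) -> bytes:
    """Serialise base header + service path header + metadata."""
    if len(h.metadata) % 4:
        raise ValueError("metadata must be a multiple of 4 bytes")
    length_words = 2 + len(h.metadata) // 4  # total NSH length in 4-byte words
    # Version, O bit and unused bits are left at zero.
    word0 = ((h.ttl & 0x3F) << 22
             | (length_words & 0x3F) << 16
             | (h.md_type & 0x0F) << 8
             | (h.next_protocol & 0xFF))
    word1 = (h.spi & 0xFFFFFF) << 8 | (h.si & 0xFF)
    return struct.pack("!II", word0, word1) + h.metadata


def unpack_nsh(data: bytes) -> Tuple[NshHeader, bytes]:
    """Parse an NSH header; return it together with the inner packet."""
    word0, word1 = struct.unpack("!II", data[:8])
    length_bytes = ((word0 >> 16) & 0x3F) * 4
    header = NshHeader(
        spi=word1 >> 8,
        si=word1 & 0xFF,
        ttl=(word0 >> 22) & 0x3F,
        md_type=(word0 >> 8) & 0x0F,
        next_protocol=word0 & 0xFF,
        metadata=data[8:length_bytes],
    )
    return header, data[length_bytes:]
```

For example, `pack_nsh(NshHeader(spi=42, si=255))` yields the eight bytes `0f c2 02 03 00 00 2a ff`, and `unpack_nsh` recovers the same header plus whatever frame follows it.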

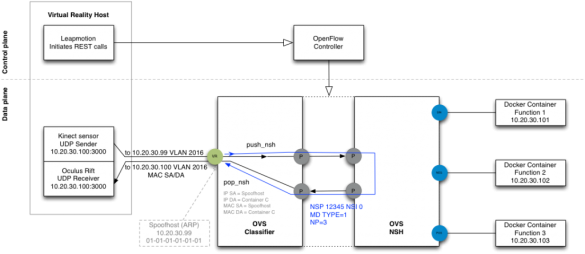

Visitors were invited to put on an Oculus Rift VR headset and enter the VR world, where they saw a 3D image of themselves captured by a Microsoft Kinect sensor. The Kinect sent its depth data through the programmable network as a data stream of about 300 Mbit/s. A Leap Motion sensor on the headset let the user open a menu with a hand gesture and choose between various network functions. Selecting a function triggered API calls to the network controller, which then programmed the network accordingly. The available functions were inversion, bumps and various sinusoidal functions, and the result was a real-time modification of the user's 3D image. If no function was selected, the data stream went through the programmable network directly back to the headset for display in the user's VR environment, resulting in an unmodified 3D image.

With NFV, functions conventionally performed by specialised network equipment are migrated to virtual environments, where they can be deployed flexibly and scaled as needed. Examples of such network functions include rate limiting, firewalling, intrusion detection, and on-the-fly encoding and decoding.
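As an illustration of a network function that needs no dedicated hardware, here is a minimal rate limiter written as ordinary software that could run in a VM or container. It uses the token bucket algorithm, one common way to implement rate limiting; it is not one of the functions used in this demo, and the class name and parameters are hypothetical. The clock is injectable so the behaviour can be tested deterministically.

```python
import time


class TokenBucket:
    """Software rate limiter: admits packets while enough tokens (bytes) remain."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float,
                 clock=time.monotonic):
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes   # start with a full bucket
        self.clock = clock          # injectable time source (seconds)
        self.last = clock()

    def allow(self, packet_len: int) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last) * self.rate)
        self.last = now
        if packet_len <= self.tokens:
            self.tokens -= packet_len
            return True
        return False  # over the limit: the caller drops or queues the packet
```

Because the limiter is just code, scaling it out means starting more instances rather than buying more appliances, which is the flexibility NFV is after.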

Applying network functions to active data streams fully transparently and in real time requires a programmable network. Such a network allows functions to be added or removed at any time and in any desired order, so that traffic can be steered through several functions in turn, creating a chain of network functions. The NSH protocol that the IETF is developing is designed for exactly this: it makes it easier to chain functions within a network without the end application having to be aware of it.
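The chaining idea can be sketched as a small forwarding table keyed on the NSH path identifier (SPI) and service index (SI). This is a hypothetical model, not SURF's controller code: it assumes every chain starts at SI 255 and that each function decrements the SI after processing, so the table entry after the last function points back to the headset. The function names `invert`, `wave` and `headset` are placeholders.

```python
from typing import Dict, List, Tuple

INITIAL_SI = 255  # assumed starting index for the first hop of every chain

PathTable = Dict[Tuple[int, int], str]


def build_path(spi: int, functions: List[str], egress: str) -> PathTable:
    """Map (SPI, SI) to the next hop for one service path.

    Each service function decrements the SI after processing, so the
    i-th function is reached with SI = INITIAL_SI - i and the entry after
    the last function hands the traffic to the egress (here: the headset).
    """
    table: PathTable = {}
    for i, function in enumerate(functions):
        table[(spi, INITIAL_SI - i)] = function
    table[(spi, INITIAL_SI - len(functions))] = egress
    return table


def trace(table: PathTable, spi: int) -> List[str]:
    """List the hops of a path by following the SI downwards."""
    hops, si = [], INITIAL_SI
    while (spi, si) in table:
        hops.append(table[(spi, si)])
        si -= 1
    return hops
```

With this model, `trace(build_path(1, ["invert", "wave"], "headset"), 1)` returns `["invert", "wave", "headset"]`. Swapping the order of the list, or passing an empty list (which sends the stream straight back to the headset), only changes table entries; the application producing the stream is untouched.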

For this demo we used a virtual OpenFlow switch running on a blade with an Intel Xeon E5-1270 v5 CPU, 4 × 16 GB of memory and a Mellanox MCX414A-BCAT network interface. The virtual switch software ran on CentOS 7 and consisted of Open vSwitch 2.5.90 with multiple patches to support NSH functionality. This virtual switch was connected over a 10 GbE link to an OpenFlow-capable Pica8 P-5101 switch. Both switches were controlled by our controller using the OpenFlow protocol.

For the demo we also used several machines running CentOS 7 and Docker 1.13, each connected directly to the programmable network by a 10 Gbps Ethernet link. This made it possible to run and select various Docker containers with VR functions on each machine. These machines are comparable to public or private cloud platforms on which virtual machines (VMs) with applications can be run. The difference compared to the 2015 demo is that we used containers instead of virtual machines. In combination with network functions, this is an interesting research area, because containers start much faster and can be deployed more flexibly than VMs.

Each Docker container runs its own VR function, which can manipulate the depth data from the Kinect sensor. Based on the Network Service Header, the programmable network sends the data stream from the Kinect sensor to the right containers (functions).
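The article names the effects (inversion, bumps, waves and animated waves) but not their formulas or the data format, so the sketch below is only a guess at what such functions could look like. It assumes a depth frame is a list of rows of integer depths in millimetres, with 0 meaning "no reading", and uses hypothetical defaults such as a 4500 mm maximum depth. Each function would run in its own container in the setup described above.

```python
import math
from typing import List

DepthFrame = List[List[int]]  # rows of depth values in millimetres; 0 = no reading


def invert(frame: DepthFrame, max_depth: int = 4500) -> DepthFrame:
    """Mirror each valid depth value around max_depth: near becomes far."""
    # Results are kept >= 1 so a valid pixel never turns into "no reading".
    return [[max(1, max_depth - d) if d else 0 for d in row] for row in frame]


def wave(frame: DepthFrame, amplitude: float = 50.0, wavelength: float = 64.0,
         phase: float = 0.0) -> DepthFrame:
    """Add a sine ripple along each row (amplitude in mm, wavelength in pixels)."""
    return [
        [max(1, round(d + amplitude * math.sin(2 * math.pi * x / wavelength + phase)))
         if d else 0
         for x, d in enumerate(row)]
        for row in frame
    ]


def animated_wave(frame: DepthFrame, t: float, speed: float = 2.0,
                  amplitude: float = 50.0, wavelength: float = 64.0) -> DepthFrame:
    """Shift the ripple's phase with time t (seconds) so it appears to move."""
    return wave(frame, amplitude, wavelength, phase=speed * t)
```

Because each function takes a frame and returns a frame, they compose in any order, which is what lets the network chain them freely.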

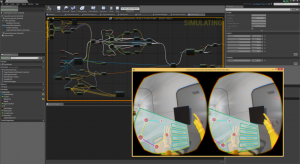

The virtual reality environment is created with the Unreal Engine 4 game engine, which provides effective support for VR head-mounted displays such as the Oculus Rift and comes with the tools needed to link all the technologies and hardware components in this demo. The Unreal Engine uses the Kinect sensor's 3D depth data to create a 3D reconstruction of the person wearing the VR headset. To do so, it first sends the depth data to a chain of network functions that process it. After processing, the depth data is returned to the Unreal Engine, which then creates the 3D representation (see image below).
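The article does not describe how the Unreal Engine turns depth data into a 3D figure. One common approach is pinhole back-projection, sketched below: every pixel with a valid depth becomes a point in camera space. The intrinsics `fx`, `fy`, `cx` and `cy` are placeholders; real values would come from the sensor's calibration, and the millimetre depth format is an assumption.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(frame: List[List[int]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth frame (mm) into camera-space points (metres).

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, where
    (u, v) is the pixel column/row and Z the measured depth. Y grows
    downwards, following image row order.
    """
    points: List[Point] = []
    for v, row in enumerate(frame):
        for u, d in enumerate(row):
            if d == 0:  # no measurement at this pixel
                continue
            z = d / 1000.0
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points
```

A renderer can then draw these points (or a mesh built from them) each frame, so any change a network function made to the depth values shows up directly in the user's 3D image.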

The user is free to decide which network functions to enable, and therefore how the 3D depth data is processed: inversion, wave and/or animated wave. The user makes this choice via the user interface in the virtual world. The Leap Motion is used to display this interface and to let the user interact with it: together with the Leap Motion, the Unreal Engine can track the user's hands in 3D and insert a representation of them into the virtual world (see image below). This lets the user interact with the virtual world intuitively.

The VR functions were programmed to be NSH-aware, which made it possible to pass context along as metadata where appropriate and available. The functions also knew which active path the stream in question belonged to, so the right modifications could be applied to the right content. Once all modifications had been performed, the programmable network knew that the data should be returned to the VR headset for display to the visitor. This was accomplished with a sequential index that tracks each packet's position in the chain, the Network Service Index (NSI).
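The NSH-aware behaviour described above can be sketched as follows. This is a hypothetical model, not the demo's code: header parsing is omitted, so a packet is represented by an already-decoded `NshPacket`, and `make_service_function` is an illustrative name. The function picks its modification by path identifier (SPI), may read context metadata, and decrements the service index so the switch knows this hop is done and, eventually, that the stream must go back to the headset.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List

DepthFrame = List[List[int]]
Modifier = Callable[[DepthFrame, Dict[str, float]], DepthFrame]


@dataclass
class NshPacket:
    spi: int                    # which service path the stream belongs to
    si: int                     # current position in that path
    context: Dict[str, float]   # metadata carried in the header (decoded)
    payload: DepthFrame         # the Kinect depth data


def make_service_function(per_path: Dict[int, Modifier]) -> Callable[[NshPacket], NshPacket]:
    """Build an NSH-aware function that picks its modification per SPI."""
    def handle(pkt: NshPacket) -> NshPacket:
        if pkt.si == 0:
            raise ValueError("service index exhausted; packet should be dropped")
        modify = per_path.get(pkt.spi)
        # Paths this function has no modification for pass through unchanged.
        payload = modify(pkt.payload, pkt.context) if modify else pkt.payload
        # Decrementing the index tells the switch this hop is done.
        return replace(pkt, si=pkt.si - 1, payload=payload)
    return handle
```

Keying the modification on the SPI is what lets one container serve several visitors' chains at once while still applying the right change to each stream.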

SURF demo team: Casper van Leeuwen, Hans Trompert, Gerben van Malenstein and Migiel de Vos

Authors: Migiel de Vos and Casper van Leeuwen

