Joris Mollinga

High Performance Machine Learning consultant at SURF in Amsterdam

Deep Learning (DL) is becoming increasingly popular. Many of the libraries and toolkits that deep learning engineers use for designing, building and training neural networks are open source, such as PyTorch and TensorFlow. These frameworks have contributed to the popularity of deep learning and make it available to everyone, from hobbyists to academic researchers to private companies.

This “democratization” of AI toolkits is generally considered a good thing. However, to truly get the most out of a dataset or code base, one needs Graphics Processing Units (GPUs), which accelerate the matrix multiplications needed for training deep learning models. In practice, both PyTorch and TensorFlow rely on CUDA for this. CUDA is NVIDIA's parallel computing platform and programming model for accelerated computing on GPUs. Because the CUDA driver is closed source, the scientific community cannot contribute to it or use it on hardware other than NVIDIA's. As a result, it has long been practically impossible to run machine learning tasks (both training and inference) on GPUs produced by the other major GPU manufacturer: AMD.

That changed with the release of PyTorch 1.8. Although this support is still in beta, the release adds a very important new feature: out-of-the-box support for ROCm, AMD's alternative to CUDA. Previously, this was only possible by installing Docker containers with a custom-built PyTorch that supports ROCm. However, for the average user this was too much of an investment, and in my experience it often did not work or did not pass the unit tests. Furthermore, the community of PyTorch users with AMD GPUs was very small, making it difficult to get the necessary support.
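To make the "out of the box" claim a bit more concrete, here is a minimal sketch of how one might check which backend a PyTorch build targets. It relies on the fact that ROCm builds of PyTorch reuse the `torch.cuda` API and the `"cuda"` device name; the helper `describe_backend` is our own hypothetical name, not part of PyTorch.

```python
import torch


def describe_backend() -> str:
    """Report which GPU backend this PyTorch build was compiled against."""
    # torch.version.hip is set on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    if hip_version is not None:
        return f"ROCm/HIP {hip_version}"
    # torch.version.cuda is set on CUDA builds and None on CPU-only builds.
    if torch.version.cuda is not None:
        return f"CUDA {torch.version.cuda}"
    return "CPU only"


print("Backend:", describe_backend())
print("GPU available:", torch.cuda.is_available())

# The same device string works for NVIDIA and AMD GPUs; fall back to CPU otherwise.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
x = torch.randn(4, 4, device=device)
product = x @ x  # a small matrix multiplication on the selected device
print("Result lives on:", product.device)
```

Because the device name stays `"cuda"` on AMD hardware, existing training scripts typically need no source changes to run on a ROCm build.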

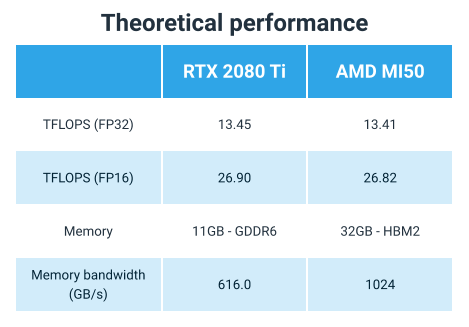

Now that this has been solved with the support of ROCm in PyTorch 1.8, it is interesting to compare the performance of both GPU backends. In this blog post we dive deeper into a number of image classification models and measure the training speed on both AMD and NVIDIA GPUs. To make a fair comparison, we compare RTX 2080 Ti GPUs with AMD MI50 GPUs, which should have nearly identical performance in terms of FLOPS. The MI50 has more memory and a higher memory bandwidth.

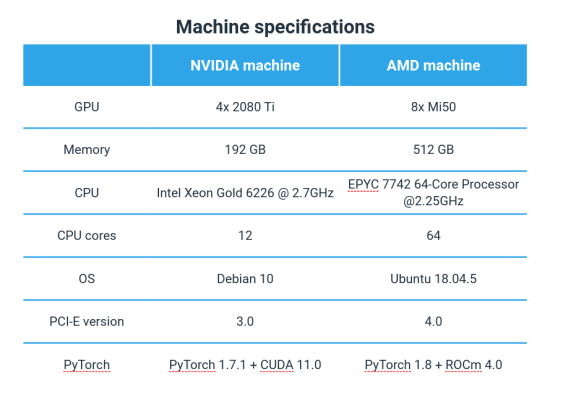

We perform benchmarks on two different machines, one with 4x RTX 2080 Ti and one with 8x AMD MI50. The specifications of the machines are shown below.

We compare a number of image classification models in FP32 precision on 1, 2 and 4 GPUs. Specifically, we conduct experiments on InceptionV3, ResNet50, ResNet101, EfficientNet-b0 and EfficientNet-b7.

For more than one GPU we use torch.nn.DataParallel. Other distribution strategies are also interesting to investigate, but are not part of this blog post. We measure training performance in images/second, averaged over 9 trials. To prevent data I/O from being a bottleneck, we use synthetic data. Lastly, we repeat all experiments with mixed precision training using torch.cuda.amp.autocast, which casts certain operations to FP16 precision to speed up training. Our code is available here. Other hyperparameters that may affect the throughput are provided in the code.
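The setup described above can be sketched roughly as follows. This is not the benchmark code linked in this post, only a minimal illustration under stated assumptions: `make_toy_model` and `images_per_second` are hypothetical names, the tiny network stands in for the real models, and the batch size, step count and optimizer are placeholders. Recent PyTorch releases also expose autocast as `torch.autocast`; we stick to `torch.cuda.amp.autocast` as named in the text.

```python
import time

import torch
import torch.nn as nn


def make_toy_model(num_classes: int = 10) -> nn.Module:
    # Hypothetical stand-in for the benchmarked networks; swap in a real model.
    return nn.Sequential(
        nn.Conv2d(3, 8, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),
        nn.Linear(8, num_classes),
    )


def images_per_second(model, num_classes=10, batch_size=8, image_size=32,
                      steps=5, trials=9, use_amp=False):
    """Average training throughput over `trials` timed runs on synthetic data."""
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    amp_enabled = use_amp and device.type == "cuda"  # autocast only on GPU here

    model = model.to(device)
    if torch.cuda.device_count() > 1:
        model = nn.DataParallel(model)  # splits each batch across visible GPUs
    model.train()

    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    criterion = nn.CrossEntropyLoss()
    scaler = torch.cuda.amp.GradScaler(enabled=amp_enabled)

    # Synthetic data created once on the device, so data I/O is not a bottleneck.
    images = torch.randn(batch_size, 3, image_size, image_size, device=device)
    labels = torch.randint(0, num_classes, (batch_size,), device=device)

    def train_step():
        optimizer.zero_grad()
        with torch.cuda.amp.autocast(enabled=amp_enabled):
            loss = criterion(model(images), labels)
        scaler.scale(loss).backward()
        scaler.step(optimizer)
        scaler.update()

    train_step()  # warm-up step, excluded from timing

    rates = []
    for _ in range(trials):
        if device.type == "cuda":
            torch.cuda.synchronize()  # wait for queued GPU work before timing
        start = time.perf_counter()
        for _ in range(steps):
            train_step()
        if device.type == "cuda":
            torch.cuda.synchronize()
        elapsed = time.perf_counter() - start
        rates.append(steps * batch_size / elapsed)
    return sum(rates) / len(rates)


if __name__ == "__main__":
    print("FP32 images/second:", images_per_second(make_toy_model()))
    print("AMP  images/second:", images_per_second(make_toy_model(), use_amp=True))
```

The explicit `torch.cuda.synchronize()` calls matter because GPU kernels are launched asynchronously; without them the timer would mostly measure launch overhead rather than the actual training work.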

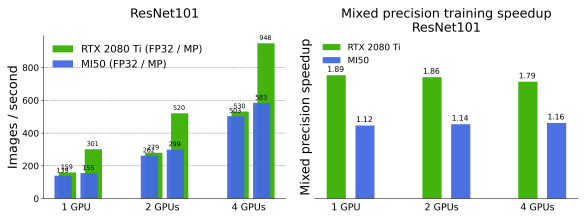

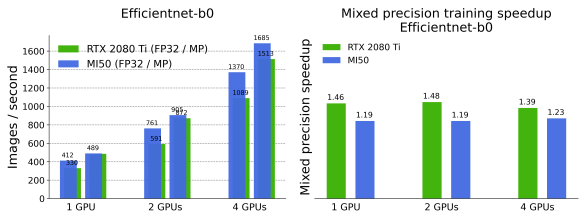

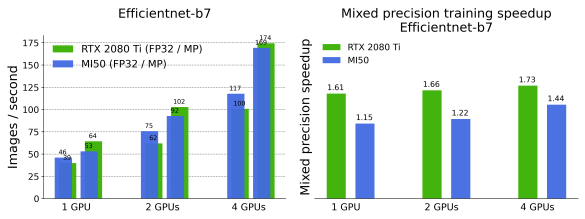

We present the training speed in images/second for the different models, along with the speedup factor achieved when training in mixed precision.
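For clarity, the two derived quantities used in this comparison can be written down as a small sketch. The function names are our own, and the numbers in the usage lines are made-up placeholders, not measurements from our experiments.

```python
def speedup_factor(fp32_images_per_sec: float, amp_images_per_sec: float) -> float:
    """Mixed precision throughput divided by FP32 throughput on the same GPU."""
    if fp32_images_per_sec <= 0:
        raise ValueError("FP32 throughput must be positive")
    return amp_images_per_sec / fp32_images_per_sec


def percent_faster(throughput_a: float, throughput_b: float) -> float:
    """How much faster A is than B, as a percentage of B's throughput."""
    if throughput_b <= 0:
        raise ValueError("Reference throughput must be positive")
    return (throughput_a / throughput_b - 1.0) * 100.0


# Placeholder values for illustration only.
print(speedup_factor(fp32_images_per_sec=100.0, amp_images_per_sec=150.0))
print(round(percent_faster(throughput_a=128.0, throughput_b=100.0), 1))
```

A speedup factor above 1 means mixed precision training is faster than FP32 on that GPU; a factor close to 1 means the switch gains little.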

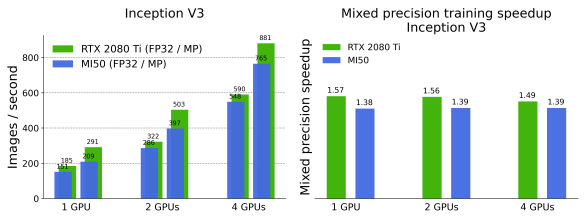

InceptionV3 is a popular choice in image classification models. It is regarded as efficient, both in terms of number of parameters and memory overhead. It features factorized convolutions and an auxiliary classifier.

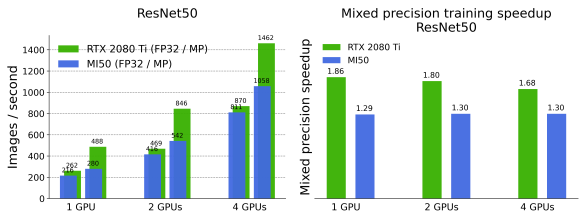

ResNet models are also a very popular choice for image classification tasks. ResNet introduced the residual connection, in which one or more weight layers are bypassed to mitigate the vanishing gradient problem. ResNets come in various shapes and sizes, but we consider only ResNet50 and ResNet101.
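As an illustration of the residual connection, here is a simplified block in PyTorch. It is a sketch, not the exact block from the benchmarked networks: ResNet50 and ResNet101 use bottleneck blocks with 1x1 convolutions, whereas this hypothetical `ResidualBlock` uses two 3x3 convolutions to keep the idea visible.

```python
import torch
import torch.nn as nn


class ResidualBlock(nn.Module):
    """Two weight layers whose output is added to the unchanged input."""

    def __init__(self, channels: int):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
        )
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # The "+ x" is the skip connection: gradients can flow through it
        # directly, bypassing the weight layers in self.body.
        return self.relu(self.body(x) + x)


block = ResidualBlock(channels=8)
out = block(torch.randn(2, 8, 16, 16))
print(out.shape)  # same shape as the input, so blocks can be stacked
```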

EfficientNet models scale the depth, width and resolution of the network in a compound manner. They come in various sizes, but we investigate only the smallest (EfficientNet-b0) and one of the largest (EfficientNet-b7) models.
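The compound scaling rule can be sketched as below. The base coefficients (1.2, 1.1, 1.15) are the ones reported in the EfficientNet paper, not something we measured or tuned here, and the released b0 to b7 variants do not necessarily map exactly onto integer values of the compound coefficient `phi`, so treat this as an illustration of the rule rather than a recipe for reproducing a specific variant.

```python
# Base coefficients from the EfficientNet paper (found there by grid search).
ALPHA = 1.2   # depth multiplier base
BETA = 1.1    # width multiplier base
GAMMA = 1.15  # input resolution multiplier base


def compound_scaling(phi: float):
    """Return (depth, width, resolution) multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def flops_multiplier(phi: float) -> float:
    """Approximate FLOPS growth: linear in depth, quadratic in width and resolution."""
    depth, width, resolution = compound_scaling(phi)
    return depth * width ** 2 * resolution ** 2


for phi in range(4):
    depth, width, resolution = compound_scaling(phi)
    print(f"phi={phi}: depth x{depth:.2f}, width x{width:.2f}, "
          f"resolution x{resolution:.2f}, FLOPS x{flops_multiplier(phi):.2f}")
```

The coefficients are chosen so that each increment of `phi` roughly doubles the FLOPS, which is why the larger variants are so much more expensive to train than the smallest one.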

For FP32, which hardware is faster depends on the model architecture. For InceptionV3 and the ResNet models, training on the RTX 2080 Ti yields higher throughput and lower training times. However, for EfficientNet models, training on an MI50 is up to 28% faster than on an RTX 2080 Ti. This is quite surprising, and we cannot explain why this is the case.

In our experiments, mixed precision training on the MI50 is never faster than on the RTX 2080 Ti. The speedup factor gained by switching from FP32 to mixed precision is much higher on the RTX 2080 Ti. This probably has to do with the availability of Tensor Cores on the RTX 2080 Ti. Tensor Cores are optimized for fast matrix multiplications and additions, which speed up machine learning pipelines. Although there is some speedup from mixed precision training on AMD hardware, it is not as significant as on NVIDIA hardware.

In our opinion, the results are a bit surprising. We had not expected the MI50 to outperform the RTX 2080 Ti, but we have shown that for some neural network architectures the MI50 is the faster option. The availability of PyTorch with a ROCm backend is a potential game changer for the GPU-for-ML market, breaking the monopoly NVIDIA has had for over a decade. It used to be the case that only NVIDIA GPUs worked out of the box, but we have shown that this is no longer true.

We would like to thank all our colleagues from SURF for providing feedback on our plots. Experiments on the NVIDIA platform were conducted on the Lisa cluster computer. Experiments on AMD hardware were conducted on the Kleurplaat system within SURF Open Innovation Lab.

