As systems that use AI methods are deployed, it is important to understand the basis for their output. This allows users to understand the behavior of the system, for example why a certain image is classified as a cat or a dog, or a tumor as benign or malignant. While some AI methods are directly interpretable, the most striking successes have come from so-called 'black-box' methods. These are so complex that it is almost impossible to understand how they reached their results through simple analysis.

In addition, understanding what happens inside the black box matters for more than trusting the output of a system. It also provides the insight needed for accountability and for detecting adverse effects such as bias and factually incorrect output. For instance, studies have shown that finetuning a language model for a medical problem brings substantial benefits, even though the model was initially pre-trained on a wide variety of internet data that had little to do with medicine. However, a later, more in-depth analysis of the finetuned model [Zhang et al. 2020, ACM] found that it had racial biases that could have been detrimental for certain medical tasks. Because of the black-box nature of the model, this bias was far from obvious.

In medical settings like these, where AI promises to support practitioners and therefore influences their decisions, it is especially crucial to understand what is happening inside the black box. In this article, we highlight mainstream explainable AI (XAI) methods and their potential pitfalls, and discuss trends we are observing. The goal is not to provide technical details about how these methods work (there are already plenty of resources for that), but rather to discuss their strengths and limitations in real use cases.

XAI and the case of the wolf

XAI methods are designed to give insight into how models produce their output. For example, they can query a complex model to highlight how input features were processed in various parts of the model to reach a given outcome. With this information, a knowledgeable user can reach a certain level of understanding of why the algorithm behaves as it does, which in turn helps build the trust a user needs to have in the output of a system.

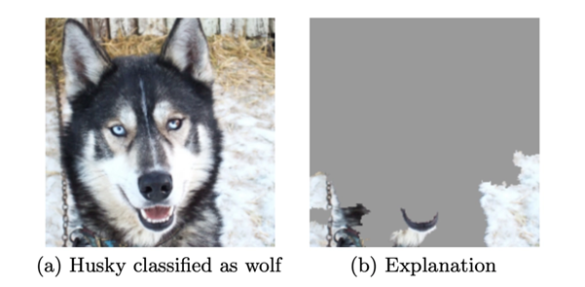

Take the example of an AI model predicting dog breeds from images. This is a simple problem on which the model makes few mistakes. Nevertheless, in some images the model mistook huskies for wolves, which at first glance makes sense because huskies look like wolves. In this case, the inaccuracy could easily be explained away. Yet after applying an XAI method, it became obvious that the model was not focusing on the husky itself to produce the result. Figure 1b shows the pixels that were important for classifying this image as a wolf. The XAI method reveals that the model focuses on the snow rather than on the features of the dog, which is what we would have expected it to use.
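To make "the pixels important for the prediction" more concrete, here is a minimal sketch of occlusion sensitivity, one common perturbation-based XAI technique. The article does not say which method produced Figure 1b, so this illustrates the general idea and does not reproduce it. Each patch of the image is masked in turn, and the drop in the model's score is recorded. The classifier `toy_wolf_score`, the 16×16 image, the patch size of 4 and the masking value of 0 are all hypothetical choices. The classifier is deliberately flawed: like the model in the husky example, it scores bright background pixels (the "snow") and ignores the animal.

```python
import numpy as np


def occlusion_map(predict, image, patch=4, baseline=0.0):
    """Occlusion sensitivity: score drop when each patch is replaced by `baseline`.

    `predict` maps a 2-D grayscale image to a scalar score (e.g. P(wolf)).
    Larger values mark regions the prediction depends on.
    """
    h, w = image.shape
    base_score = predict(image)
    heat = np.zeros((h, w))
    for i in range(0, h, patch):
        for j in range(0, w, patch):
            occluded = image.copy()
            occluded[i:i + patch, j:j + patch] = baseline  # mask one patch
            heat[i:i + patch, j:j + patch] = base_score - predict(occluded)
    return heat


# Hypothetical toy image: bright "snow" background, darker animal in the centre.
img = np.ones((16, 16))
img[4:12, 4:12] = 0.3

# Boolean mask of the background (everything outside the animal).
background = np.ones_like(img, dtype=bool)
background[4:12, 4:12] = False


def toy_wolf_score(image):
    # Hypothetical flawed classifier: the "wolf" score is the mean brightness
    # of the background, so it keys on the snow and ignores the animal.
    return float(image[background].mean())


heat = occlusion_map(toy_wolf_score, img)
share = heat[background].sum() / heat.sum()
print(f"share of attribution on background: {share:.2f}")  # prints 1.00
```

In this toy case, all of the attribution lands on the background. That is the kind of signal that exposed the snow shortcut. The map shows only where the score is sensitive. It does not show why, so interpreting it is still left to the user.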

In principle, such XAI methods are helpful when it is clear what constitutes a reasonable explanation, as in the husky vs. wolf case. The method only provides a glimpse into which parts of the input the model deems important. The interpretation of the explanation is left to us. But what happens when the situation is murkier? What happens when the images are not so easy to interpret?

The medical example

Imagine an AI system supporting medical specialists in complex tasks, such as analyzing tissue biopsies for the presence of cancerous cells. Within the ExaMode project, histopathology data is combined with pathologists’ reports to train a system to recognize precursor lesions of adenocarcinoma using multiple data types (multi-modality). Because of the medical nature of the case, the system must be robust and trustworthy for the medical practitioner. It also needs to be inherently explainable, which is no trivial ambition in a domain that is itself hard to interpret.

In the ExaMode project, we used so-called whole-slide images (WSIs) linked to textual diagnostic reports to learn a useful neural representation. Such learned representations can be used for many downstream tasks, such as text or image generation. Learning them is a first step toward a model that understands a given dataset without much explicit supervision.

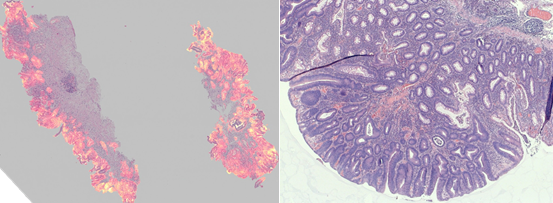

Histopathology images are hard to interpret: a pathologist needs several years of training to learn to find the areas within the tissue that could indicate a lesion. A pathologist should be able to specify which regions of the WSI were decisive for the diagnosis. Finding the correct morphologies in the WSIs is key to knowing whether a patient is progressing to cancer and to referring that patient for the appropriate treatment. It should also be emphasized that pathologists do not always agree on which parts of the WSI are important for the diagnosis.

For this reason, deploying an XAI method that highlights the importance of various regions in a WSI comes with an additional complication: the source data is open to a wide variety of interpretations. In this case, just highlighting the regions that were important for the prediction does not have sufficient explanatory power, because different people may interpret the same explanation in different ways. For example, the highlighted pixels could have been chosen because of an insignificant color difference, or because of noise such as an irrelevant artefact from the scanning procedure (Figure 2). This opens the door to needing an explanation of the explainability method itself! This lack of clarity makes it hard for medical practitioners to use the output of such an XAI method in their diagnoses.

Discussion

The problem emerges at the intersection of robust model engineering (does it do what we think it does?), epistemology (does it mean what we think it means?) and medical practice (how does this information fit within my diagnostic procedure?). This raises the question of what specifically needs to be explained, and how. What constitutes a good explanation, and what exactly is being explained?

It is not yet clear whether general XAI methods will emerge, or whether we will always have to consider the specific context of the domain and the logical step that the AI method solves. This is an ongoing field of research, and researchers at several institutions are actively working on it. Babushkina and Votsis (2022) from the University of Twente approach this problem from an epistemological perspective, while Balayn et al. (2021) from TU Delft consider how semantic concepts can provide an intermediary layer to support explainability.

We believe that approaches at the intersection of computer science and philosophy can help address the questions raised in this article, and we are actively collaborating with both fields in the hope of getting closer to "trustworthy AI". Do you recognize these questions as well? Do you work on similar use cases, either from the epistemology side or from the AI user perspective (or already at the intersection of both!), and would you like to help us think through this issue? Please contact us!

This article has been written by Matthieu Laneuville, Bryan Cardenas Guevara and Duuk Baten.

Bibliography

- Babushkina, D., & Votsis, A. (2022). Epistemo-ethical constraints on AI-human decision making for diagnostic purposes. Ethics and Information Technology, 24(2), 1-15.

- Balayn, A., Soilis, P., Lofi, C., Yang, J., & Bozzon, A. (2021, April). What do you mean? Interpreting image classification with crowdsourced concept extraction and analysis. In Proceedings of the Web Conference 2021 (pp. 1937-1948).
