Vivian van Oijen

I am part of the High Performance Machine Learning team at SURF, where I…

Ever since the release of ChatGPT, people have been amazed by it and have used it for all sorts of tasks, such as content creation. However, the model has also faced criticism; some have raised concerns about plagiarism, for example. AI-generated content detectors claim to distinguish text written by a human from text written by an AI. How well do these tools really work? According to our findings, they are no better than random classifiers when tested on AI-generated content.

The performance of these tools is not the only concern, however. For one, there is no guarantee against false positives, and wrongfully accusing someone of plagiarism would be especially harmful. It also seems likely that this will turn into a game of cat and mouse, with language models and the tools promising to detect them continually trying to outdo each other. All in all, detection tools do not seem to offer a very robust or long-term solution. Perhaps it would be better to include the impact of artificial intelligence in the existing discussion about the best way to design exams and assignments to test students.

We compare the following AI-generated text detectors that are freely available:

Of course there are more of these tools, but we decided to randomly sample a few of them. They are listed in alphabetical order.

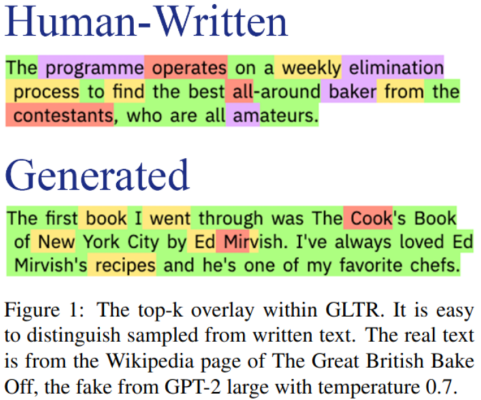

Apart from these AI content detectors, we also look at GLTR (https://arxiv.org/abs/1906.04043). Unlike the detectors, GLTR does not classify a text as "AI" or "not AI"; instead, it visualises how likely each word in the text is to appear where it does. The makers describe GLTR as “a tool to support humans in detecting whether a text was generated by a model.” It uses the existing language models GPT-2 and BERT to calculate the statistical probability of each word appearing at that position, based on the remaining text. Language models favour high-probability word sequences, which is not how humans write. This is why human texts seem to contain more randomness, while AI-generated text tends to be statistically likely.

We test each tool’s performance in detecting AI-generated text. The tools classify input text either with a label or with a score from 1 to 100. The labels we use are “AI”, “human” and “unclear”. We ask ChatGPT to generate text for each of the following prompts and then have each tool classify it:

These prompts cover a variety of elements: requests for factual information, rewrites of existing text, fictional or hypothetical scenarios, advice, explanations at different levels, and an impersonation of a specified character. We also include a Dutch translation of one of the prompts.

As part of our experiment, we are also interested in how well the tools classify human-written text. Not all errors are equally bad: a false positive, where someone is incorrectly accused of plagiarism, would be much more harmful than a false negative, where a case of AI-generated text slips through. For our experiment we take the following texts as examples of human-written text:

Let’s take a look at the results, starting with the AI-generated texts. The tables below show the results per tool as well as per prompt. For these two tables the correct answer is always “AI”, which was given 19 times out of 68. That is an overall accuracy of 27.9%, while the best performing tool reaches a maximum of 50% accuracy (i.e., no better than a coin toss).

| Tool | #AI | #Human | #Unclear | Accuracy |
|---|---|---|---|---|
| Content at Scale | 0 | 3 | 7 | 0% |
| Copyleaks | 4 | 3 | 2 | 44% |
| Corrector App | 5 | 4 | 1 | 50% |
| Crossplag | 3 | 7 | 0 | 30% |
| GPTZero | 3 | 1 | 6 | 30% |
| OpenAI | 4 | 1 | 5 | 40% |
| Writer | 0 | 3 | 6 | 0% |
| Total | 19 | 22 | 27 | 28% |

Table 1. Each tool’s prediction over the 10 example prompts described above. The Writer and Copyleaks tools only have 9 results, since they refused the Dutch prompt.

| Prompt | #AI | #Human | #Unclear | Accuracy |
|---|---|---|---|---|
| 1.1 | 3 | 1 | 3 | 43% |
| 1.2 | 0 | 5 | 2 | 0% |
| 1.3 | 3 | 2 | 2 | 43% |
| 2 | 2 | 1 | 4 | 29% |
| 3 | 1 | 4 | 2 | 14% |
| 4.1 | 2 | 1 | 4 | 29% |
| 4.2 | 4 | 0 | 3 | 57% |
| 5.1 | 3 | 0 | 4 | 43% |
| 5.2 (Dutch) | 1 | 4 | 0 | 20% |
| 6 | 0 | 4 | 3 | 0% |
| Total | 19 | 22 | 27 | 28% |

Table 2. Predictions per prompt.
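The accuracy column is the number of “AI” verdicts divided by the number of texts a tool actually returned a verdict for. The following is a minimal Python sketch that recomputes the per-tool figures from the counts in Table 1; the names `TABLE_1` and `accuracy` are illustrative and not part of the original experiment.

```python
# Counts copied from Table 1: (#AI, #Human, #Unclear) per tool.
TABLE_1 = {
    "Content at Scale": (0, 3, 7),
    "Copyleaks": (4, 3, 2),
    "Corrector App": (5, 4, 1),
    "Crossplag": (3, 7, 0),
    "GPTZero": (3, 1, 6),
    "OpenAI": (4, 1, 5),
    "Writer": (0, 3, 6),
}


def accuracy(correct: int, total: int) -> float:
    """Fraction of texts that received the correct label."""
    return correct / total


for tool, (ai, human, unclear) in TABLE_1.items():
    # n is 9 instead of 10 for the tools that refused the Dutch prompt.
    n = ai + human + unclear
    print(f"{tool:<17} {ai}/{n} = {accuracy(ai, n):.0%}")

total_ai = sum(counts[0] for counts in TABLE_1.values())
total_n = sum(sum(counts) for counts in TABLE_1.values())
print(f"Overall: {total_ai}/{total_n} = {accuracy(total_ai, total_n):.1%}")
```

Note that “unclear” counts as incorrect here, which is why a cautious tool such as Content at Scale scores 0% without ever labelling an AI text as human-written with certainty in most cases.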

Most of the texts we generated were either incorrectly classified as human text or received some variation of “possibly AI”, “Might include parts generated with AI” or “unclear”. It is worth noting that the tools do not report their results in the same way; some give percentages and others use words such as “likely”, “possibly” and “unlikely”. We therefore have to interpret the results a bit in order to compare them. We choose to do this the way we imagine a teacher might when using these tools to help detect the use of AI in students’ homework, for example. That is, the tool needs to be reasonably sure of its classification before we take it seriously. That is why we interpret any result with words like “possibly” or “might” as “unclear”. For percentage-based results, we only consider scores of 80% or higher as certain.

One prompt was in Dutch. Most tools warned that they do not support languages other than English. Two of them (Writer and Copyleaks) gave no result at all; the other five classified the text anyway. Four of those five classified the text as human. The only tool that classified the Dutch text correctly was the one by OpenAI.

Prompts 1.2, 3 and 6 are especially difficult for the tools to classify. These are the prompts where ChatGPT is asked to either rewrite a human-written text or write something in a specific style. In addition, for prompt 4 we explicitly ask ChatGPT to write more “human-like”, but that description alone does not seem to help. For the sake of brevity we include only one of these prompts, although we experimented with more variations. We made suggestions such as exaggerating the characters and adding certain paragraphs, but got mixed results.

Next, let’s see how well the tools do with human-written text. Again, we show the results per tool and per text. For these two tables the correct answer is “human”, which was given 29 out of 35 times. Even when the result was not “human”, it was never “AI”. Sometimes it was “unclear”, but there was not a single false positive!
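The interpretation rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function `normalise`, its parameters, and the idea that each raw result has already been reduced to a "leaning" plus either a verbal verdict or a percentage are all hypothetical. The real tools each present their output differently, so some manual reading is still needed.

```python
from typing import Optional

CERTAINTY_THRESHOLD = 80.0           # percent, the cut-off used in this article
HEDGE_WORDS = ("possibly", "might")  # hedges named in this article; extend as needed


def normalise(leaning: str,
              wording: Optional[str] = None,
              percent: Optional[float] = None) -> str:
    """Map one raw tool result to "AI", "human" or "unclear".

    leaning: the class the tool points towards, "AI" or "human" (assumed input).
    wording: the tool's verbal verdict, if it gives one.
    percent: the tool's score for `leaning`, if it gives one.
    """
    # A hedged verbal verdict is never treated as a firm classification.
    if wording is not None and any(w in wording.lower() for w in HEDGE_WORDS):
        return "unclear"
    # A percentage below the threshold is not certain enough either.
    if percent is not None and percent < CERTAINTY_THRESHOLD:
        return "unclear"
    return leaning


print(normalise("AI", wording="Might include parts generated with AI"))
print(normalise("AI", percent=92))
print(normalise("human", percent=65))
```

A stricter or more lenient threshold changes the split between “unclear” and the two firm labels, so the threshold is worth reporting alongside any results.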

| Tool | #AI | #Human | #Unclear | Accuracy |
|---|---|---|---|---|
| Content at Scale | 0 | 3 | 2 | 60% |
| Copyleaks | 0 | 5 | 0 | 100% |
| Corrector App | 0 | 5 | 0 | 100% |
| Crossplag | 0 | 5 | 0 | 100% |
| GPTZero | 0 | 3 | 2 | 60% |
| OpenAI | 0 | 4 | 1 | 80% |
| Writer | 0 | 4 | 1 | 80% |
| Total | 0 | 29 | 6 | 83% |

Table 3. Each tool’s predictions for the examples of human-written text.

| Text | #AI | #Human | #Unclear | Accuracy |
|---|---|---|---|---|
| 1.1 | 0 | 5 | 2 | 71% |
| 1.2 | 0 | 4 | 3 | 57% |
| 2 | 0 | 6 | 1 | 86% |
| 3 | 0 | 7 | 0 | 100% |
| 4 | 0 | 7 | 0 | 100% |
| Total | 0 | 29 | 6 | 83% |

Table 4. Predictions per text.

Lastly, the results from GLTR. In this figure we show the “top k overlay” made by the GLTR tool for prompts 1.1 and 4.1; the colours show whether each word is among the top 10, 100 or 1000 most probable words, or outside of that. Red and purple mark less predictable words than green and yellow. We see that the human text (from Wikipedia and a SURF report) contains significantly more red and purple than the AI text does.
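To make the colour scheme concrete, here is a minimal Python sketch of the bucketing step. It is not GLTR's own code: it assumes you already have, for every position, the model's probability for each candidate word (GLTR obtains these from GPT-2 or BERT), and it assumes the mapping green = top 10, yellow = top 100, red = top 1000, purple = everything else. The function names are illustrative.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Rank thresholds and their colours (assumed mapping, see the note above).
BUCKETS: Sequence[Tuple[int, str]] = ((10, "green"), (100, "yellow"), (1000, "red"))
FALLBACK = "purple"


def rank_of(token: str, probs: Dict[str, float]) -> Optional[int]:
    """1-based rank of `token` among the predictions (1 = most probable).

    Returns None if the token is missing from the (possibly truncated) predictions.
    """
    if token not in probs:
        return None
    ordered = sorted(probs, key=probs.get, reverse=True)
    return ordered.index(token) + 1


def colour(rank: Optional[int]) -> str:
    """Colour bucket for a rank; unknown ranks fall outside every top-k."""
    if rank is not None:
        for top_k, name in BUCKETS:
            if rank <= top_k:
                return name
    return FALLBACK


def overlay(tokens: List[str],
            predictions: List[Dict[str, float]]) -> List[Tuple[str, str]]:
    """Pair every token with the colour of its rank at that position."""
    return [(tok, colour(rank_of(tok, probs)))
            for tok, probs in zip(tokens, predictions)]
```

A text in which almost every word comes out green is one the model finds very predictable, which is the pattern the overlay makes visible for the AI-generated examples.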

This tool does not classify whether something was generated by a language model or not, but it might help you detect AI-generated content. According to the research paper, people who used GLTR were able to detect fake text with an accuracy of over 72%, as opposed to the 54% accuracy people without GLTR obtained. The highest accuracy we saw in the tools we compared for this article was 50%, much lower than 72%. Do keep in mind though that GLTR was published in 2019, before ChatGPT or even GPT-3, and uses older models. It may be less effective on the most recent language models.

AI detection tools are sometimes effective, but far from perfect. It seems to be quite tricky to determine whether a text was written by a human or generated through a language model, which shows how far language models have come.

Language models can be very helpful. They are just another tool to be used and can (partially) automate more tedious tasks, such as structuring documents, writing simple code, summarizing text and even extracting data from text. Apart from automation they can act as an assistant or second pair of eyes that can give feedback on texts you wrote and help with debugging code.

On the flip side, they also carry the risk of misuse. Plagiarism is an example that people have raised concerns about, but entering sensitive data into a public API or blindly assuming that AI-generated text is factually accurate are also potential problems.

People need to be educated on how to use language models responsibly, and misuse should not be tolerated. The same holds for any other resource on the internet: using it to find information is not inherently bad, but blindly copy-pasting and not citing where your information comes from is misuse.

Detection tools like the ones we include in this article can be useful in preventing misuse, but they seem to lag behind language models. However, the fact that they can detect AI in some cases, together with the results from GLTR, suggests that detectable differences between AI-generated and human-written text do exist. Now that the use of language models is getting more attention and has people concerned, these tools might develop further and get better. That being said, the goal of language models is to model language in as human-like a way as possible. Therefore, the task will only get more difficult as these models get better. This makes it that much more important to teach people to use language models responsibly.

As we said in the introduction, we sampled a few of the tools that are available. These might improve and more tools might come out in the future. If you want to evaluate a tool yourself, you can simply repeat the experiment we did here!

1. Choose some prompts. You could use the same ones we did, or you can make up your own. Whatever you choose, make sure that you include several elements. Some examples of challenges to include are factual essay writing, creative writing, writing code and writing in a specific style.

2. Generate text for each prompt using AI. You can go to ChatGPT and have it generate text for each of your prompts. Instead of ChatGPT you can of course use any other language model, though most do not have such a user-friendly interface.

3. Feed each of the texts to the tool you are testing and count how often it correctly classifies your texts as AI. Additionally, play around with the text from step 2: change some words, add your own paragraph, or translate it to another language and back. Ask the generating tool to change the style of the text (“write like a student”, or “write like an expert”). Test whether this slightly different text is still recognized as AI.

4. Don’t forget to also try some human texts! You do not want to be using a tool that is likely to classify human-written text as AI-generated.
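If the tool you are testing can be called from code, the counting can be automated. The sketch below is one possible way to do that in Python, not the procedure we used (we entered the texts by hand). The `detect` argument is a hypothetical stand-in for whatever wraps your tool; it is assumed to return “AI”, “human” or “unclear” for a given text.

```python
from collections import Counter
from typing import Callable, Dict, Sequence

# Hypothetical interface: takes a text, returns "AI", "human" or "unclear".
Detector = Callable[[str], str]


def tally(detect: Detector, texts: Sequence[str]) -> Counter:
    """Count how often each label is returned for a set of texts."""
    return Counter(detect(text) for text in texts)


def evaluate(detect: Detector,
             ai_texts: Sequence[str],
             human_texts: Sequence[str]) -> Dict[str, float]:
    """Summarise a detector on AI-generated and human-written texts."""
    if not ai_texts or not human_texts:
        raise ValueError("need at least one AI text and one human text")
    ai = tally(detect, ai_texts)
    human = tally(detect, human_texts)
    return {
        # Share of AI texts that were labelled "AI".
        "ai_accuracy": ai["AI"] / len(ai_texts),
        # Share of human texts that were labelled "human".
        "human_accuracy": human["human"] / len(human_texts),
        # Share of human texts wrongly labelled "AI": the costly error.
        "false_positive_rate": human["AI"] / len(human_texts),
    }
```

Reporting the false positive rate separately keeps the most harmful kind of error visible instead of hiding it inside a single accuracy number.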

Happy testing!


This article has 2 comments


Nice article, interesting results. Turnitin just released a preview of their detection methods on AI writing in their products. Would be interesting to see how Turnitin performs on your testdata.

Is accuracy really the right metric for such an assessment? In reality we're likely dealing with highly unbalanced classification (e.g. ~5% true positive rate). Moreover, false positives would be much more damaging than false negatives, since it's a huge problem if we incorrectly accuse students of fraud. So why measure them by their accuracy scores, rather than their precision and FPR? (And the class imbalance should of course reflect the expected reality.)
