Generative AI (GenAI) will have a major impact on the future of programming and computer science, as well as computing education [1]. At the Department of Information and Computing Sciences at Utrecht University (UU), we are running a project to investigate this impact within our study programs and gather knowledge on how teachers could deal with the threats and opportunities of GenAI.

To better understand the values and experiences of our department’s teachers regarding GenAI, we distributed a survey in the fall of 2023. This survey was largely based on a survey conducted among 57 computing teachers from 12 countries (USA, UK, Canada, Jordan, Pakistan, and others) in the summer of 2023 [3], which allows us to compare the results to international perspectives. In this article, we present the findings of our survey and compare them with those of computing educators worldwide.

Teachers and students at the UU computing program

The Department of Information and Computing Sciences at Utrecht University offers Bachelor’s and Master’s programs to over 2,000 students. These programs cover disciplines such as Computing Science, Information Science, Game and Media Technology, Data Science, Human-Computer Interaction, Business Informatics, and AI. In total, 41 teachers from these programs responded to our survey. They have an average of 13 years of teaching experience (ranging from 1 to 42 years) as lecturers, PhD candidates with teaching tasks, or coordinators, and they teach classes of varying sizes.

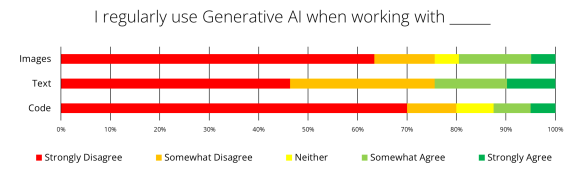

First, we asked the teachers whether they regularly use GenAI tools themselves (see Figure 1). Nearly a quarter of teachers (24%) somewhat or strongly agreed that they use it for text, fewer (20%) use it to generate images, and the smallest group (12%) use it for code. Overall, UU teachers do not seem to be avid users of GenAI (yet); these percentages are lower than in the international survey, in which 32% of respondents used GenAI for text and 23% for code.

Students’ behaviour

Several teachers mentioned having observed students using GenAI. Many of these examples are about using GenAI for text, such as writing (parts of) a thesis, a job application letter, and other assignment texts. Some also mentioned how they recognize this, for example, because the text is very generic or overly formal, using specific words like ‘however’ and ‘delves.’ Teachers also noticed that students use GenAI for code (ChatGPT and Copilot), such as for debugging (‘works well enough’), learning a new language or framework (in a project course), or just generating code (snippets).

Teaching practices

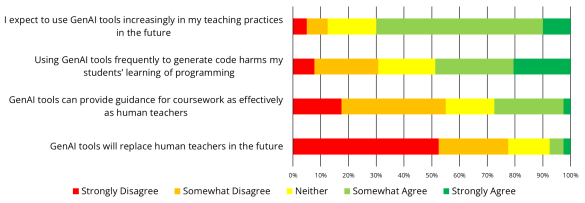

We presented teachers with several statements regarding the use of GenAI in their teaching (see Figure 2). In reaction to the statement ‘I expect to use GenAI tools increasingly in my teaching practices in the future’, the majority (70%) of teachers somewhat or strongly agreed, similar to CS teachers internationally. About half of the teachers (49%) considered the frequent use of GenAI tools to generate code (somewhat) harmful to their students’ learning of programming, compared with 40% of international teachers. More than a quarter (28%) agreed that GenAI tools can provide coursework guidance as effectively as human teachers, but more than half (53%) somewhat or strongly disagreed with this statement. Only 8% believed that GenAI tools would replace human teachers in the future, compared with 14% of respondents in the international survey.

When (not) to allow GenAI

Almost all teachers (92%) stated that GenAI should be allowed in some programming assignments and disallowed in others (based on the assignment type, course level, etc.). When we asked them to elaborate on this, several teachers mentioned that it depends on the learning goals of the course. Introductory programming was mentioned multiple times as an example where GenAI should not be allowed as it hinders learning. Another teacher mentioned that it should not be used if some theory needs to be understood, or when learning how to develop algorithms. As one teacher stated: ‘Getting something to work is not the same as understanding why it works.’

Many teachers, however, acknowledge that GenAI is here to stay. Teachers repeatedly mentioned that GenAI can be used ‘as a tool’ for support and learning. They named specific courses and topics where they think GenAI may be useful, such as software engineering project courses, data science courses in which the programming is application-oriented, a data structures course for the development of snippets (but not for the structure or algorithm itself), or just for programming in practice outside of the university setting.

Other responses focused on specific tasks. Some teachers mentioned that GenAI use is fine for coursework and assignments and for simple or repetitive tasks such as checking grammar, generating starter code, and helping with a literature review. However, some say it should not be allowed for exams, writing, or other ‘core’ activities. Concerns were raised about using GenAI to generate code: its correctness cannot be guaranteed, there are copyright issues, and the generated code might not match the student’s level. Teachers also mentioned that students should be taught to critically evaluate GenAI output and to understand why certain skills need to be learned without relying on GenAI. As one teacher noted, it would be good to distinguish between different types of AI tools. For example, using an AI grammar checker differs greatly from using an AI chatbot such as ChatGPT, and the two might warrant different guidelines on their allowed use.

How to use GenAI

We asked how teachers currently use GenAI tools for text generation within computing courses; fewer than half of the teachers answered this question, and some of them indicated that they do not use it for that purpose. Text and grammar improvement in general was mentioned a few times, but some more specific use cases were also given. One teacher provides prompts that students can apply to their own documents before submitting them. An example of such a prompt given by this teacher is ‘Do the research methods described in this thesis proposal serve to answer the following research question?’. Other concrete use cases are ‘to find edge cases for the assignment’ and ‘generate user scenarios to better understand the context of the assignment’.

Asking how teachers use GenAI for code generation yielded only a handful of answers. One teacher advises students to use it for very specific coding tasks and compares it to using code from an API. Another teacher says ‘it will free me from resolving language-specific questions,’ but adds that ‘my assignments are typically formulated in such a way that thinking of a solution is the hard part,’ and there GenAI ‘is not coming close.’ Only one teacher mentions using it to generate teaching materials, such as programming exercises with their solutions and code examples for their lectures.

Changes to teaching and assessment

Some teachers indicated that they have already made, or plan to make, changes to their teaching approaches. Some have changed their exam questions or assignments to make them unsolvable by AI. Others take a different approach, incorporating AI theory and explaining how to use GenAI properly. Other specific use cases include employing GenAI for brainstorming, finding bugs in code, requirements specification, and several more course-specific tasks. However, many teachers state that they have changed nothing or very little (e.g., ‘Nothing made, nothing planned’). They give various reasons, such as GenAI’s inability to solve their questions or to help in their course.

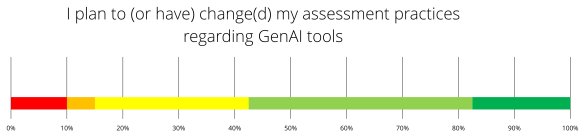

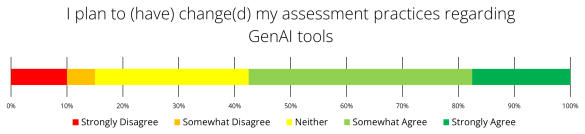

We also asked teachers whether they plan to change (or have changed) their assessment practices due to GenAI, to which 58% responded positively and 15% negatively (see Figure 3). In addition, we asked them to describe the (planned) changes. Three teachers indicated that they were not planning to make changes because they do not consider GenAI capable of helping students in their courses. A small group of teachers indicated that they wanted to check for the use of GenAI through one-to-one interviews or presentations, or by incorporating reflection on its use in assignments. Other changes described by the teachers included decreasing the weight of assignments that could easily be solved using GenAI and placing more emphasis on closed-book, on-campus testing. One teacher used GenAI to generate extra feedback on large documents and added it to their own feedback with a note (‘This is what ChatGPT came up with’). One teacher instructed the TAs to check the code and report ‘suspicious code’, and another changed the assignments to be unsolvable by AI.

Finally, only a few teachers responded to our question on how successful their implemented changes were, and most of them said something along the lines of ‘too early to say’. One teacher, who mentioned teaching prompt engineering, said that ‘students like it a lot’ and that they ‘see the benefit for their future career’.

Policies and ethics

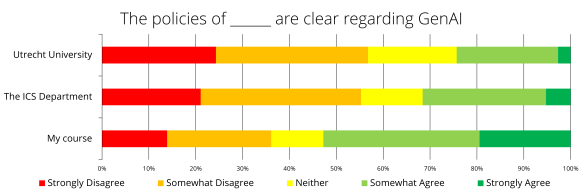

We gave teachers several statements on how clear the policies on using GenAI are at different levels (see Figure 4). Most teachers (57%) said they do not think the rules at the university are clear, and about the same share (55%) feel the same way about the rules in their department. For their own courses, however, the trend is inverted: 53% of the teachers somewhat or strongly agree that their course’s rules are clear. Compared to the international survey, where only 12% think university rules are clear and 44% feel the same about course rules, our teachers see both sets of rules as somewhat clearer. Additionally, we asked them whether there should be no restrictions on GenAI use. Here, 76% reacted negatively and only 11% positively, which is more cautious than the international findings, where 68% opposed and 16% supported this statement. However, only 38% said that their syllabus contains explicit policies on when GenAI may be used.

We asked teachers to share the statements they use about when it is acceptable to use GenAI. Only a limited number responded. Three teachers clearly state in their policies that using GenAI is prohibited and constitutes fraud. One teacher used the metaphor of a ‘cigarette’ to highlight the potential harm of tools like ChatGPT for learning to program: these tools look cool initially, but they can cause ‘more harm than good’. Some teachers allow GenAI for simple tasks (‘basic code generation or for grammar checks,’ ‘find examples and generate summaries’). One teacher does not mention GenAI specifically in their policy but refers to including citations of external code. Only one teacher reported allowing its use ‘whenever needed’. Finally, one teacher asked to see examples of good statements.

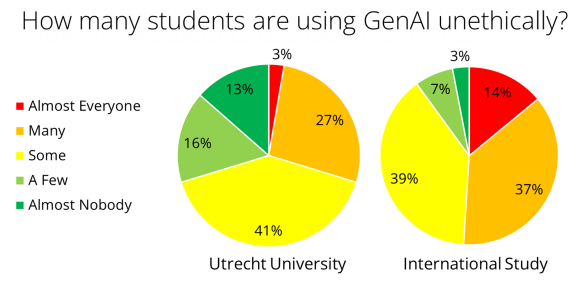

Regarding ethical use, we asked teachers whether they think students use GenAI tools in ways they would consider unethical; the results are shown in Figure 5-left. Only 3% of teachers believe almost all students use GenAI unethically, but 68% think that some or many students do. On the other hand, 29% of the teachers are more hopeful, believing that only a few or no students use the tools unethically. Compared to the international study, teachers at Utrecht University tend to believe that fewer students use GenAI unethically (see Figure 5-right).

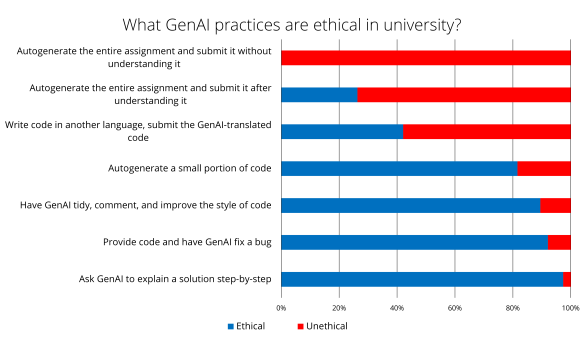

We also presented teachers with different ways of using GenAI and asked them to select the ones they consider unethical in the absence of an explicit course policy (see Figure 6). All teachers agreed that auto-generating and submitting an entire assignment without understanding it is unethical. In addition, 74% of teachers think it is wrong to complete the whole assignment with GenAI, even if students understand it before handing it in. Writing code in another language and submitting the GenAI-translated code is inappropriate to 58% of the teachers. However, a vast majority (82%) think that auto-generating a small portion of code is ethical. Similarly, 89% of the teachers see no ethical problems with using GenAI to make code look better and to add comments. Most teachers (92% and 97%, respectively) believe it is fine to use GenAI to fix user-provided code or to explain a solution step by step. These results are comparable to those of the international survey. Finally, only three teachers (8%) think that they can detect code written by GenAI tools, and only nine teachers (23%) actively check for unauthorized use of GenAI tools.

Looking into the future

While some teachers have taken immediate action, several have been waiting and observing. In this last section, we look at teachers’ concerns for the future and their ideas about changing the curriculum in the long run.

Teachers’ concerns

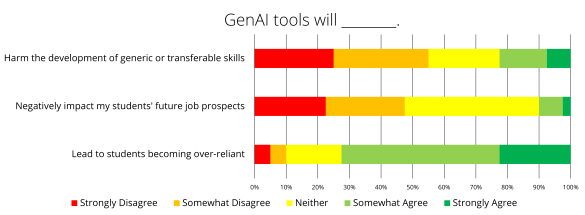

We presented teachers with several statements regarding concerns for the future; the responses are shown in Figure 7. Most teachers (73%) were worried to some degree that their students would become over-reliant on GenAI tools. Few teachers thought that GenAI tools would negatively impact their students’ future job prospects, although almost half were undecided. Teachers had varied reactions to whether GenAI tools would harm the development of generic or transferable skills, such as teamwork, problem-solving, and leadership.

Changing the curriculum

We asked teachers whether students must be taught how to use GenAI tools well for their future careers; most agreed, only one teacher disagreed, and four (10%) neither agreed nor disagreed. We also asked whether teachers plan to change (or have changed) their curriculum, to which 38% replied positively and 43% negatively (see Figure 8). In addition, we were interested in what new content they think should be taught and which courses should be added to the curriculum. The most common answers were teaching how to use GenAI (including prompt engineering), offering theory on how AI works, covering its limitations and ethics, and teaching students to critically evaluate its output. One teacher would want to focus more on ‘understanding, checking/fixing existing code, and analyzing the runtime behavior of programs’. Another teacher, who described themselves as a ‘techno-optimist’, noted that GenAI should be incorporated into existing courses rather than added as a new, separate topic. Yet another teacher questioned why a MOOC combined with an AI tool ‘is not a full replacement for pursuing a BSc/MSc at our university’. According to this teacher, we should consider ‘the qualities that we think students miss out on’ and ‘emphasize those, while embracing that we do not have to teach them programming in the level of detail that we previously deemed necessary’.

Conclusion

The survey results show that the UU teachers who responded are actively thinking about how and when (not) to incorporate GenAI. There are some skeptical views on GenAI’s capabilities and concerns about students misusing and over-relying on these tools. At the same time, there are numerous ideas on how to employ GenAI to give students extra support and to use it to their advantage. However, few teachers have started implementing changes, and for those who have, it is still too early to assess the consequences. Note that our survey was conducted in the fall of 2023. Although GenAI tools had already been available for a while at that time, the rapid developments in this area may have led teachers to change their opinions or introduce new practices since then. We plan to follow up on this survey to determine what these changes might be.

While many of the concerns identified in the literature were also raised by UU teachers in this survey, the teachers did not touch on all documented opportunities for GenAI [1]. For example, they hardly mention the possibility of using GenAI to help generate course materials, such as multiple-choice questions and programming exercises. Specialised AI-based tools were not mentioned either, although most of these are still in their experimental stages.

Further reading

Other international studies have examined teachers’ perspectives through interviews. A series of interviews with 20 teachers [2] focused on pedagogical approaches in the short and long term if GenAI could hypothetically solve all programming exercises undetected. Another study investigated the concerns and expectations of teachers and students [4]. More resources and results from our project will be published on our website.

The project team members are Hieke Keuning, Ioanna Lykourentzou, Sergey Sosnovsky, Isaac Alpizar-Chacon, Imke de Jong, Christian Köppe, Georg Krempl, Matthieu Brinkhuis, Dong Nguyen, Albert Gatt, Lauren Beehler, and Bas de Boer.
