In the ORI community we often talk about the why of Open Research Information: better metadata, stronger PIDs, more transparency, less dependency on closed platforms.

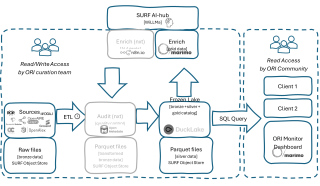

This is a short update on the how—because we’re actively experimenting with a concrete stack that can power a future ORI Monitor and, more broadly, a national analytics layer for open research information.

The premise is simple: the quality of open research information is under pressure—metadata is incomplete, PIDs are missing or inconsistent, licensing is not always clear, and keeping CRIS and repository records aligned still costs a lot of manual effort.

At the same time, a wave of open technologies is finally making a different approach technically and practically achievable.

What we’re trying to enable

Our immediate goal is very concrete: a shared infrastructure that can help us build ORI dashboards such as:

- PID adoption dashboards (DOI, ORCID, ROR, grant DOI)

- quality & completeness metrics for any metadata field

- license visibility and reusability metrics

And if that base works well, it can extend to things like AI-assisted classification (document types, affiliations, topics), metadata generation from open access PDFs, and even cross-institutional open access monitoring.

The breakthrough layer: Frozen Ducklake

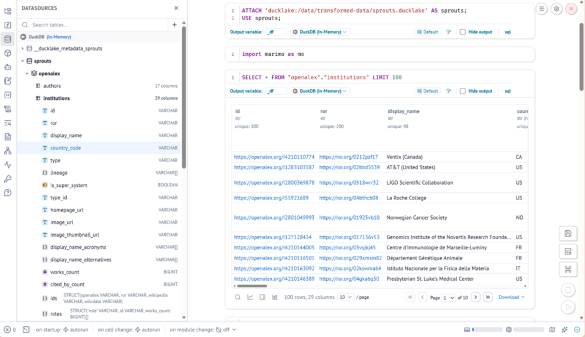

The heart of the experiment is something we call a Frozen Ducklake: a data lake that stores datasets as Parquet files on object storage, while still being fully SQL-queryable using DuckDB—without running “always-on” database servers.

In practice, we’re aiming for a layered setup:

- Bronze: raw snapshots (e.g., OpenAlex, OpenAIRE, ROR, OpenAPC, Crossref)

- Silver: transformed Parquet datasets produced via ETL pipelines

- Ducklake catalog: automatic table discovery, versioning, and metadata registration

- SURF Research Cloud + S3-compatible storage as the scalable infrastructure base
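
As an illustration of what a Bronze to Silver step in the layers above might do, the sketch below normalizes DOI strings so that the same work can be joined across sources. It is an assumption about one plausible transformation, not a description of our actual ETL pipelines. The function names and sample rows are hypothetical, and a real pipeline would write Parquet rather than return Python lists.

```python
import re
from typing import Optional

# Strip common DOI prefixes such as "https://doi.org/", "http://dx.doi.org/" or "doi:".
_DOI_PREFIX = re.compile(r"^(?:https?://(?:dx\.)?doi\.org/|doi:)\s*", re.IGNORECASE)


def normalize_doi(raw: Optional[str]) -> Optional[str]:
    """Return a bare, lowercase DOI, or None when the value does not look like a DOI.

    DOIs are case-insensitive, so lowercasing is a common convention for joins.
    """
    if raw is None:
        return None
    doi = _DOI_PREFIX.sub("", raw.strip()).lower()
    # Very light sanity check (an assumption): "10." prefix plus a suffix after "/".
    if doi.startswith("10.") and "/" in doi:
        return doi
    return None


def bronze_to_silver(bronze_rows: list) -> list:
    """Copy each raw row and replace its 'doi' value with the normalized form."""
    return [{**row, "doi": normalize_doi(row.get("doi"))} for row in bronze_rows]


# Hypothetical raw rows; the DOIs are invented placeholders.
bronze = [
    {"source": "cris", "doi": "https://doi.org/10.1234/ABC.1"},
    {"source": "repository", "doi": " DOI: 10.1234/abc.1 "},
    {"source": "cris", "doi": "n/a"},
]

silver = bronze_to_silver(bronze)
for row in silver:
    print(row)
```

After this step the first two rows share the same `doi` value, and the third is marked as missing rather than carrying a junk string into the dashboards.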

Why we’re excited about this for ORI work:

- no database servers → fewer locks, bottlenecks, and cost surprises

- fast SQL analytics with DuckDB, locally or in the cloud

- reproducible pipelines are natural (data snapshots + query logic)

- “open by design” aligns with FAIR, sovereignty goals, and Barcelona-style commitments
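
The "data snapshots + query logic" point can be sketched as a query that lives in version control next to the data it runs on. In the stack described here, DuckDB would run such SQL directly over Parquet files. To keep this example runnable with only the Python standard library, it uses an in-memory `sqlite3` database as a stand-in. The table name `silver_works`, its columns, and the rows are hypothetical.

```python
import sqlite3

# The query is a plain string constant, so it can be versioned alongside the snapshot.
LICENSE_QUERY = """
SELECT publication_year,
       COUNT(*) AS works,
       SUM(CASE WHEN license IS NOT NULL AND license <> '' THEN 1 ELSE 0 END)
           AS with_license
FROM silver_works
GROUP BY publication_year
ORDER BY publication_year
"""


def license_visibility(conn: sqlite3.Connection) -> list:
    """Return (year, number of works, percentage with a license) per year."""
    rows = conn.execute(LICENSE_QUERY).fetchall()
    return [
        (year, works, round(100.0 * with_license / works, 1))
        for year, works, with_license in rows
    ]


# Stand-in for a Silver table; in the real setup this would be Parquet on object storage.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE silver_works (work_id TEXT, publication_year INTEGER, license TEXT)"
)
conn.executemany(
    "INSERT INTO silver_works VALUES (?, ?, ?)",
    [
        ("w1", 2023, "cc-by"),
        ("w2", 2023, None),
        ("w3", 2024, "cc-by-nc"),
        ("w4", 2024, "cc-by"),
        ("w5", 2024, ""),
        ("w6", 2024, "cc0"),
    ],
)

visibility = license_visibility(conn)
for year, works, pct in visibility:
    print(f"{year}: {works} works, {pct}% with a license")
```

The SQL is deliberately plain (`COUNT`, `SUM`, `CASE`), so it should carry over to DuckDB with little or no change. Rerunning the same query against the same snapshot gives the same numbers, which is what makes a dashboard figure reproducible.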

The bigger idea: this can become a national ORI analytics backbone where institutions can plug in without losing ownership or control.

Further Reading: Frozen DuckLakes for Multi-User, Serverless Data Access – DuckLake || Object Store | SURF.nl || SURF Research Cloud | SURF.nl

From data to insight: Marimo as the ORI notebook + dashboard engine

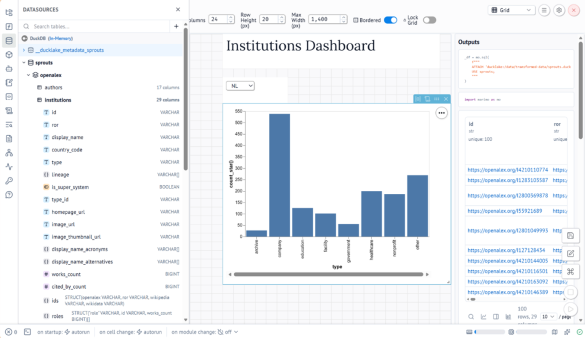

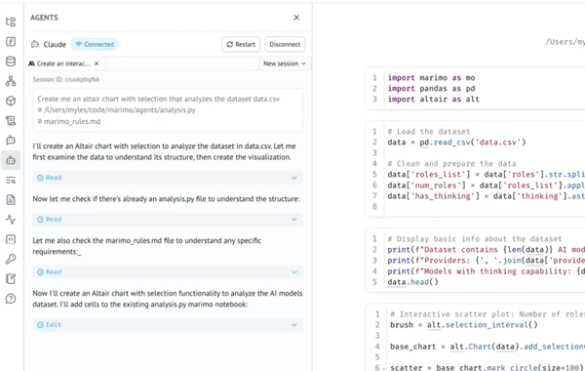

A data layer is only useful if the community can work with it. That’s why we’re using Marimo as the analysis and dashboard environment.

What makes it a good fit for ORI work:

- it combines Python + SQL in one flow (useful for mixing CRIS exports with OpenAlex/OpenAIRE datasets)

- it has an app-mode to publish interactive dashboards as read-only views

- it supports AI-assisted coding to generate queries and transformations faster (with transparency)

- it’s open source, unlike many commercial notebooks/dashboards

This is the layer where ORI monitoring becomes tangible: dashboards for PID adoption, metadata completeness, licensing, and more—without forcing anyone into a commercial ecosystem.

Further Reading: marimo | a next-generation Python notebook

Trust and governance: OpenMetadata as the “brain” around the lake

[Next: optional expansion in BROCCOLI]

As soon as datasets multiply and pipelines evolve, “just put it in a bucket” stops working. Governance becomes part of the product.

That’s where OpenMetadata comes in: it provides cataloguing, profiling, quality rules, lineage, and documentation—so we can track what a metric means and where it comes from.

In practice, it adds:

- automatic dataset detection & cataloguing

- profiling (nulls, completeness, distributions)

- data quality rules & observability

- lineage from raw → ETL → dashboard

- role-based governance and documentation
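
To give a feel for the "data quality rules" item above, here is a sketch of one rule that is easy to state precisely: an ORCID iD must have the right shape and a correct check character (ISO 7064 MOD 11-2, as documented by ORCID). This is plain Python, not OpenMetadata's API. How such a rule would be registered in OpenMetadata is not shown, and the function names are our own.

```python
import re
from typing import Optional

_ORCID_PATTERN = re.compile(r"^\d{4}-\d{4}-\d{4}-\d{3}[\dX]$")
_ORCID_URL_PREFIX = "HTTPS://ORCID.ORG/"


def orcid_check_character(base_digits: str) -> str:
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(value: Optional[str]) -> bool:
    """Return True when the value is a well-formed ORCID iD with a valid checksum."""
    if value is None:
        return False
    candidate = value.strip().upper()
    if candidate.startswith(_ORCID_URL_PREFIX):
        candidate = candidate[len(_ORCID_URL_PREFIX):]
    if not _ORCID_PATTERN.match(candidate):
        return False
    digits = candidate.replace("-", "")
    return orcid_check_character(digits[:15]) == digits[15]


# 0000-0002-1825-0097 is ORCID's published example iD; the second value has a typo.
for value in ["0000-0002-1825-0097", "0000-0002-1825-0098", "unknown"]:
    print(value, is_valid_orcid(value))
```

A rule like this separates "the ORCID field is filled" from "the ORCID field is filled with something usable", which are two different completeness numbers on a dashboard.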

Why this matters for national ORI monitoring: it makes gaps visible to institutions, turns Barcelona-style commitments into measurable practice, and makes stewardship a shared process—not a local burden.

Further Reading: OpenMetadata: #1 Open Source Metadata Platform

Metadata enrichment: agentic AI workflows (carefully, and transparently)

[Next: optional expansion in BROCCOLI]

After storage + governance comes enrichment. Several Dutch pioneers are already experimenting with AI-driven metadata workflows, and we’re exploring how this could fit responsibly in an ORI context.

Concrete enrichment tasks AI can support include:

- extracting metadata from PDFs at scale

- normalizing authors or affiliations

- matching ambiguous organisation names to ROR IDs

- suggesting missing values and flagging anomalies

Tools we’re looking at include n8n (open automation with AI agents), CrewAI / Academic-Agents (multi-agent orchestration in Python), and (where relevant) Databricks AI functions.

A typical workflow could look like:

- CRIS exports provide partial metadata

- agents inspect PDFs and propose enrichments

- fuzzy matching suggests ORCIDs/ROR IDs

- a second agent validates confidence

- results are written back into the lake with lineage
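
The matching and validation steps in the workflow above can be sketched without any agent framework. In this deliberately simple stand-in, a string-similarity score from Python's `difflib` plays the role of the matching agent and a fixed threshold plays the role of the validating agent. No LLM is involved. The lookup table, the placeholder identifiers (not real ROR IDs), the threshold of 0.85, and all function names are assumptions for illustration.

```python
from difflib import SequenceMatcher

# Hypothetical lookup: normalized organisation name -> placeholder identifier.
ROR_LOOKUP = {
    "university of groningen": "ror-placeholder-groningen",
    "wageningen university & research": "ror-placeholder-wageningen",
    "utrecht university": "ror-placeholder-utrecht",
}


def _normalize(name: str) -> str:
    """Lowercase, drop commas and collapse whitespace."""
    return " ".join(name.lower().replace(",", " ").split())


def suggest_ror(raw_name: str, lookup: dict = ROR_LOOKUP) -> dict:
    """Propose the most similar known organisation, with a similarity score."""
    query = _normalize(raw_name)
    best_name, best_score = None, 0.0
    for name in lookup:
        score = SequenceMatcher(None, query, name).ratio()
        if score > best_score:
            best_name, best_score = name, score
    return {
        "input": raw_name,
        "matched_name": best_name,
        "ror_id": lookup.get(best_name),
        "confidence": round(best_score, 3),
    }


def validate(suggestion: dict, accept_at: float = 0.85) -> dict:
    """Second pass: accept confident matches, route the rest to human review."""
    status = "accepted" if suggestion["confidence"] >= accept_at else "needs_review"
    # 'method' is a minimal provenance note that could travel with the record.
    return {**suggestion, "status": status, "method": "difflib-ratio"}


for raw in ["Utrecht University,", "Groningen Univ"]:
    print(validate(suggest_ror(raw)))
```

Keeping the proposal and the validation as separate steps, each leaving a trace in the record, is what makes the output auditable: a reviewer can see what was suggested, how confident the match was, and why it was accepted or held back.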

This is explicitly not framed as “AI will solve metadata.” It’s about reducing repetitive work with auditable outputs. It’s already being explored at RUG and WUR, and in the context of the BROCCOLI proposal.

Further Reading: n8n: AI Workflow Automation Platform & Tools || Academy - Federated Agentic Systems || CrewAI - The Leading Multi-Agent Platform

The stack, end-to-end

Here’s how the pieces interlock into one open ORI stack:

- Frozen Ducklake: core storage + SQL analytics layer

- SURF S3 storage: scalable object storage

- SURF AI-Hub: selected open LLM APIs

- Marimo: notebook + dashboard engine

- OpenMetadata: governance, quality, lineage

- n8n AI workflows: extraction, matching, enrichment

The design goal is a federated, future-proof sovereign infrastructure, built around community governance rather than vendor lock-in.

How this relates to BROCCOLI (and why we’re moving a bit ahead)

This work does not stand on its own. It is deliberately aligned with the proposed BROCCOLI project, which focuses on improving metadata quality through enrichment, feedback loops, and shared interventions across institutions.

BROCCOLI is designed to start from a more programmatic and community-driven setup, with explicit funding and governance structures. What we are doing here runs one step ahead as an experiment: testing architectural choices, tooling, and workflows before BROCCOLI formally starts.

You can think of this work as testing the waters:

- validating whether a Frozen Ducklake + DuckDB stack is viable for national ORI analytics

- exploring how Marimo can function as an open, reproducible dashboard layer

- understanding what OpenMetadata adds (and where it adds friction) in a real ORI context

- experimenting with agentic AI workflows for metadata enrichment without locking them into a single institutional setup

These experiments are intentionally lightweight and reversible. They allow us to learn what works, what scales, and what does not, so that BROCCOLI can start on firmer ground—technically and organizationally.

Importantly, this also means we are not pre-empting BROCCOLI. We are not fixing architectures, indicators, or workflows in advance. Instead, we are creating informed options that BROCCOLI can adopt, adapt, or reject. The goal is to reduce uncertainty, not to narrow choices.

In that sense, PID to Portal, this “next-gen ORI infrastructure” work, and BROCCOLI should be seen as consecutive steps in the same trajectory:

- PID to Portal strengthens the chain and governance (from identifiers to portal)

- This experimental stack explores how monitoring and stewardship could work in practice

- BROCCOLI can then scale the successful patterns into a coordinated, funded intervention framework

If BROCCOLI proceeds, these early experiments will feed directly into its design—technically, methodologically, and culturally.

Further Reading: BROCCOLI sprouts: Full proposal submitted for Open Science NL Infrastructure call | SURF Communities
