Impressive hardware

Years of secret development, culminating in Vision Pro, have produced an XR headset that packs quite a lot of hardware into a design resembling a pair of ski goggles: an Apple M2 processor, a 23 megapixel micro-OLED display (more than 3 times the pixel count of a Meta Quest 2), pancake lenses that reduce the thickness of the optical stack, 12 cameras (for head, hand and eye tracking), six microphones, plus other sensors such as a LiDAR scanner for spatial mapping of the user's environment. A new dedicated "R1" chip processes all the incoming data from the cameras and other sensors. Compared to current XR headsets from other vendors the Vision Pro hardware is quite impressive. The Vision Pro runs a dedicated operating system called visionOS, built on the same foundation as macOS, iOS and iPadOS (similar to what Microsoft did with Windows Holographic on the HoloLens 2).
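As a rough check on the "more than 3 times" figure, the sketch below compares total pixel counts. The 23 megapixel total comes from the text above; the Quest 2 panel resolution of 1832 x 1920 per eye is an assumption taken from Meta's published specifications, not from this article.

```python
# Rough pixel-count comparison; the Quest 2 resolution is an assumption
# (Meta's published per-eye figure), not something stated in this article.
VISION_PRO_TOTAL_PIXELS = 23_000_000  # "23 megapixel", both eyes combined

QUEST2_WIDTH_PER_EYE = 1832
QUEST2_HEIGHT_PER_EYE = 1920
QUEST2_EYES = 2


def total_pixels(width: int, height: int, eyes: int) -> int:
    """Total number of pixels across all eye views."""
    return width * height * eyes


quest2_total = total_pixels(QUEST2_WIDTH_PER_EYE, QUEST2_HEIGHT_PER_EYE, QUEST2_EYES)
pixel_ratio = VISION_PRO_TOTAL_PIXELS / quest2_total

print(f"Quest 2 total pixels: {quest2_total:,}")
print(f"Vision Pro / Quest 2: {pixel_ratio:.2f}x")
```

Under that assumption the ratio comes out a little above 3, consistent with the claim.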

Getting all that hardware to fit in the headset must have been quite a challenge. One outcome is an external battery pack, tethered to the headset with a cable, that provides only 2 hours of usage. Although you can plug into the mains for unlimited usage, there currently seems to be no way to use the Vision Pro without a cable attached (even though the launch video does show that in a few instances).

In terms of CPU and GPU power no hard numbers are available, nor is it known exactly which variant of the M2 chip is included in the Vision Pro. Some earlier GPU benchmark results seem to indicate that an M2 is capable of 4x the frame rate of a Snapdragon XR2 Gen 1 (the chip used in the Meta Quest 2) when rendering at 1080p. But then again, the Vision Pro has many more pixels to render than a Quest 2. Compared to the XR2 Gen 2 (expected to be used in the upcoming Quest 3) an M2 would still be 1.5x faster. However, running a chip at maximum performance isn't always practical, due to power and heat issues. In any case, these are just fun guessing exercises until the first real comparisons come out, and should be taken with a grain of salt. All in all, the fact that Apple can produce its own silicon for its own devices gives it a great advantage over vendors relying on the generic Snapdragon XR2 produced by Qualcomm, which is used in a lot of devices from different vendors.
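To make the guessing exercise concrete, here is a minimal sketch of how the 4x benchmark advantage shrinks once each chip renders its own headset's pixel count. It assumes frame rate scales inversely with the number of pixels rendered, which real GPUs do not strictly follow, and it assumes a Quest 2 resolution of 1832 x 1920 per eye (Meta's published figure, not from this article).

```python
# Back-of-the-envelope estimate only. Assumes frame rate scales inversely
# with the number of pixels rendered, which real GPUs do not strictly follow.
M2_SPEEDUP_AT_EQUAL_RESOLUTION = 4.0   # vs. XR2 Gen 1, from the text above
VISION_PRO_PIXELS = 23_000_000         # from the hardware description
QUEST2_PIXELS = 2 * 1832 * 1920        # assumption: Meta's per-eye spec, two eyes


def native_speedup(speedup_equal_res: float, own_pixels: int, other_pixels: int) -> float:
    """Speedup left over once each chip renders its own headset's pixel count."""
    return speedup_equal_res * other_pixels / own_pixels


estimate = native_speedup(M2_SPEEDUP_AT_EQUAL_RESOLUTION, VISION_PRO_PIXELS, QUEST2_PIXELS)
print(f"Estimated M2 advantage at native resolution: {estimate:.2f}x")
```

Under these assumptions most of the raw advantage is consumed by the higher resolution, which is one more reason to treat such numbers with care.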

A passthrough headset

The Vision Pro is a passthrough device, meaning that what's shown on the displays inside the headset - and therefore what your eyes see - is based on video streams coming from forward-looking cameras on the outside. The same technique is used by the Meta Quest Pro and Lynx R1. Since the displays can show anything (the camera feeds from the front, a fully virtual world, or some combination of the two), the Apple Vision Pro can be called a mixed reality device (but see the section on terminology below).

The field of view of an XR device greatly influences the experience and usability. Apple has not provided an official FOV value so far, but reviews indicate it to be somewhere in the range from "not wider than Valve Index" to "roughly between Quest Pro and PSVR2". So it seems the Vision Pro does not make a great step forward in field of view.

Since cameras are the basis for the real-world view it remains to be seen how well the passthrough performs in challenging light conditions. For example, the Meta Quest Pro image can become really noisy when used indoors in the evening, when only relatively weak artificial lighting is available. However, the Vision Pro does feature "infrared flood illuminators" to improve hand tracking performance in low-light conditions, which at least suggests those conditions are being targeted. The opposite case, outdoor use (even in bright sunlight), was not shown at all in the launch video.

One nice feature for those wearing glasses is the possibility of using prescription inserts, instead of trying to fit the headset over your glasses (although the inserts might actually be expensive). Glasses inside a closed-off XR device have never been comfortable, and inserts allow for a smaller design that's still "glasses inclusive" for those affected. This does make the device more personalized, but it seems to be single-user anyway (see below).

On top of the device is a small physical wheel, called the Digital Crown, which allows the user to choose the amount of blending between the real and virtual worlds they see. Having such a physical means of controlling the amount of "virtuality" is an interesting addition and might indeed give the user a measure of control that is lacking on other headsets. For example, on other headsets you usually have to exit the current fully immersive application with one or more actions and then enable passthrough view (or double-tap the side of the headset, in the case of the Quest) to see reality. Simply turning the crown wheel to quickly switch over to reality might be just what is needed to make fully immersive experiences more acceptable, as the user stays in full control over when they want or need to see reality. The Digital Crown can also be double-clicked to activate the Optic ID scan to confirm payment when purchasing something, so it is more than a gimmick.

Reverse passthrough

A really interesting feature is the so-called "reverse passthrough", or EyeSight as Apple calls it. On the front of the Vision Pro is another display, which is not meant for you as the wearer, but for those around you. In situations where you want to engage with someone in your (real) environment the display can show the part of your face covered by the headset, allowing some form of eye contact without having to take the headset off. In situations where you are not observing the real world around you - say you're watching a movie in a virtual movie theater - the front display will not show your face, but some colored wavy patterns. This signals to those around you that you're immersed in a virtual world. But when someone in front of you looks at your headset, that person becomes visible to you, as part of the current immersive world is replaced with a real-world view.

The face shown on the reverse passthrough display is not your real face, by the way, but a rendering based on a 3D scan you make during "enrollment" when you first start using the headset.

All in all, reverse passthrough is an interesting addition, but we'll have to see whether it becomes socially accepted and worth the added device cost, and so might become a standard feature on (high-end) XR headsets. Meta already had research prototypes of reverse passthrough a few years ago, so this isn't an entirely new idea. But Meta used cameras to show the user's actual eyes on the outside displays, and so far a similar feature hasn't made it into a Meta product.

Terminology

If you listened carefully to the Vision Pro announcement during the WWDC keynote you will have noticed Apple did not use the terms VR or XR at all (nor metaverse, but who still does?). CEO Tim Cook did use the term *augmented reality* when introducing the Vision Pro, mentioning "blending digital content with the real world" and calling the Vision Pro the first product of a new "AR platform". Indeed, some of the developer material presented at WWDC points out that developers have already been able to build "AR apps" for iPhone and iPad over the past years.

However, near the end of the keynote Apple switches to the term *spatial computing*, calling the Vision Pro "our first-ever wearable, spatial computer [...] that blends digital content with your world". The term spatial computing isn't new, and was already used years ago by Magic Leap and Microsoft when talking about their respective XR devices. It seems Apple wants to start fresh with "spatial computing" and ignore most other existing terms, like AR, VR and XR, while redefining a few others. The reason is probably that the current terminology isn't well-defined and is even ambiguous. Also, any association with Meta's use of AR and VR as marketing terms is probably being avoided explicitly, to keep Apple's vision and the perception of its products clean.

Curiously, the name of the Vision Pro operating system, visionOS, was rumoured in the months before the release to be "xrOS". And indeed that name is still used in quite a few places, even in the developer material. So the rename to visionOS must have been a relatively late change (for unknown reasons).

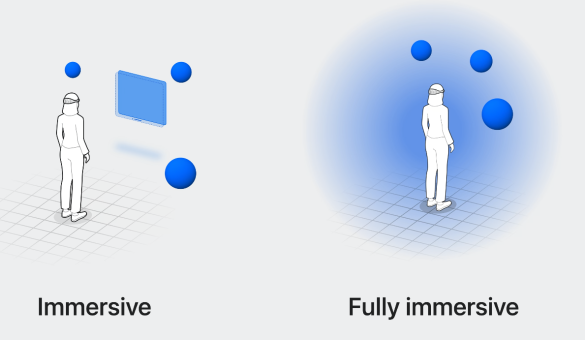

But using different terminology can actually cause more confusion (unless we all switch to the Apple terminology, of course). For example, "immersive environment" for most people working in XR so far meant fully immersive, i.e. VR. But Apple uses immersiveness as a scale, calling even a real-world view where a user sees only a small bit of virtual content in front of them "immersive". So far, such experiences would usually be called mixed or augmented reality.

Not directly related to XR, but also absent from the presentation, were the terms AI and Artificial Intelligence. AI was definitely part of the product announcements, but it was called "machine learning" (with even a few specific mentions of "transformers").

Price & Strategy

Quite a few press reports mention that the demo device and apps they got to try did not have all Vision Pro features ready, and quite a bit of final polishing will probably be done before the first US release, which is months away. Also interesting is that none of the reporters who got to try the Vision Pro were allowed to take pictures of themselves wearing the device (the exception being Good Morning America). Similarly, Tim Cook was not shown wearing the device, in contrast to Meta's Mark Zuckerberg, who has publicly worn many of Meta's headsets over the years when they were introduced.

According to reviewers, what Apple has created is "best in class" in many respects compared to existing XR headsets. And for a price tag of US$3,500 it had better be...

For that cost you can buy seven of the upcoming Meta Quest 3 at US$500 each, which is expected to be available in the fall of 2023 and can at least do some of the things the Vision Pro can (just like the Quest Pro). The current workhorse for VR in education and research, the Quest 2, together with the comparable Pico 4, recently had its price lowered to US$300. This makes that headset even more attractive for situations where it is good enough for the intended workload. And the Vision Pro does not immediately appear to have features that are vastly more important for use cases in education and research, making it hard to justify spending all that money, even if you could afford it.

But then again Apple is almost certainly not targeting the average consumer (or education and research) with the Vision Pro. It is the first XR headset they release and they are testing the waters to see how the product gets accepted, mostly by early adopters willing to pay the high price and developers keen on making first inroads with Apple's version of spatial computing. The "Pro" in the name signals this, and we can probably expect cheaper "lite" editions in 1-2 years, after user experience has helped sharpen its design and determine which features are most important.

Also, what Apple is initially focusing on with this device is productivity, media and entertainment. Disney's CEO Bob Iger even took the stage during WWDC to announce new Disney content in 3D specifically for the Vision Pro, again highlighting the entertainment angle. For more mundane content the Vision Pro will allow viewing your existing photos and video collection (or any other visual content) by showing it hovering in front of you, say in your living room, in any displayed size you want. It's very much like the Photos app on your iPhone, but with the user interface displayed virtually in the headset for you to see.

Productivity

For productivity and work you'll be able to use many of the standard iOS apps on the Vision Pro, "seeing your favorite apps live right in front of you". Only some integrations between different Apple devices and the Vision Pro were shown, but being able to look at your MacBook screen and then have your laptop desktop appear as a virtual screen in the headset (while still working on the MacBook) is pretty cool and seems easy to use. That type of integration is, of course, something Apple is really good at, and improved ease of use for XR devices is definitely needed.

Video-conferencing using the Vision Pro is certainly one of the interesting use cases targeted. When you first set up your device you make a 3D face scan of yourself. This is used as your digital "persona", a realistic 3D representation of your face and upper body, used in FaceTime for example.

And when FaceTiming with multiple people, all of their virtual personas will be placed side by side, with audio coming from the correct direction. Using eye tracking the persona can show a realistic gaze direction. None of this is new (Meta Horizon Workrooms has similar features when used on a Quest Pro), but while Meta uses a cartoony avatar, Apple has opted for realism. Interestingly, Meta has been working on similarly realistic face scans, called Codec Avatar 2.0.

One clever feature that Apple has created for apps that need a webcam feed, say Zoom, is an "avatar webcam", which provides a real-time digital rendering of your persona. This means that existing video-conferencing apps should work out-of-the-box while you're wearing the Vision Pro. The other participants in the video call will see your digital persona, which might sound weird at first, but even Microsoft is starting to provide avatars in Teams.

What is really interesting in all of this is that Apple has managed (if we can trust the reviews) to make a device and operating system that is easy and intuitive to use, especially for productivity and entertainment. That certainly can't be said for most other XR headsets, and hopefully competition from Apple spurs those vendors to up their game in this area. However, things have been very quiet around the Microsoft and Meta partnership in this area since it was announced in October 2022.

3D Photos & Videos

There's a button on top of the Vision Pro for taking (3D) photos and videos. By leveraging the built-in 3D cameras the Vision Pro headset can make stereoscopic recordings, allowing you to relive memories, as it were, by playing them back in a life-like way. This isn't a fully realistic 3D presentation where you can move all around, but it should definitely be more impressive than a 2D photo. It does sound (and look) a bit creepy, though, to be wearing a Vision Pro while 3D recording your children, as shown in the launch video, and quite a few people commented on this on social media. It also has interesting privacy-related aspects when you start to record other people and places in 3D.

However, being able to easily "scan" a location in 3D might be very useful for 3D conservation and reproduction (think digital heritage or virtual field trips). And during scanning the headset could even provide visual feedback to the wearer on the progress of the scan and show the recorded 3D results, similar to RoomPlan already available on newer iPhones.

Interaction

One very interesting design decision with the Vision Pro is the combined use of eye and hand tracking as the core interaction method, without relying on separate tracked controllers. The Vision Pro contains extra cameras pointing downwards, something that no other headset has. The effect is that you can use hand gestures while, say, resting your hands on your lap. Other headsets use a "laser pointer" approach, with rays emanating from a user's hands. This implies you have to hold your hands fairly high in view of the forward-facing cameras, which is less relaxed for your arms, especially over longer periods.

All of this can be avoided on the Vision Pro. Together with clever use of eye tracking to detect a user's focus and intention Apple seems to have nailed the interaction, which reviewers claim is very intuitive and natural.

One does wonder how much "mind-reading" AI-based technology is actually involved, judging by details shared by an ex-Apple engineer on some of the neuro-technology research they worked on and that might have landed in the Vision Pro:

"One of the coolest results involved predicting a user was going to click on something before they actually did. That was a ton of work and something I'm proud of. Your pupil reacts before you click in part because you expect something will happen after you click. So you can create biofeedback with a user's brain by monitoring their eye behavior, and redesigning the UI in real time to create more of this anticipatory pupil response."

Again, having Apple break new ground with easy-to-use intuitive eye and hand control might push other XR vendors to start working on more hand-oriented interaction. The use of tracked controllers will not go away, but Meta adding more and more hand interaction options on the Quest range at least moves away from having to use controllers. After all, the Microsoft HoloLens 2 also managed without.

If Apple's view on spatial computing becomes popular and we start to see users working full days wearing a passthrough headset, such as the Vision Pro, then the long-term effects on the user could be interesting. It seems there is not much research yet, but possible side-effects could be eye strain, changes in perception and cognition, or changes in your relation to reality. Without the headset on you might miss a lot of the 3D features and interactions you've become used to and perhaps feel similar to not having your mobile phone at hand (but worse). Hopefully, some more fundamental research will be done on these effects.

Gaming

Gaming made a quick appearance, but was definitely not a main usage promoted at this point. One reason for this might be the fundamental choice of using hand and eye tracking for interacting with virtual content. Hand tracking is harder to use with games, as game interaction can be quite dynamic, which is why most headsets use dedicated tracked controllers. But maybe Apple has some tricks up its sleeve to provide enough controller-like input using only hand tracking.

A slightly different type of application, to become available later, will be Rec Room. This is a fairly popular virtual 3D social space, allowing you to meet or play games in VR. Such a use case is definitely not your typical Apple showcase, but of everything shown it comes closest to the idea of a "metaverse" or gaming.

So for now it seems Meta and PCVR vendors, such as HTC, are fairly safe when it comes to gaming market share.

Privacy

With XR devices containing more and more sensors privacy becomes more of an issue. Which data is gathered? Where does it get stored or sent? Who has access to it? What about the legal aspects? Not unexpectedly Apple is clearly making privacy a fundamental feature for Vision Pro, given its privacy focus in all its products.

First, an iris scan is used to unlock the Vision Pro, in a feature called Optic ID, allowing only a single user to access the device (and its data). For XR headsets this is not entirely new, as the Microsoft HoloLens 2 already had a similar feature. Gathering iris data is obviously very privacy-sensitive, but Apple claims iris data never leaves the Vision Pro and is stored encrypted. It is unclear whether Optic ID easily allows the Vision Pro device to be used by multiple users. Maybe the device only supports a single user, similar to an iPhone. If so, that would be disappointing, as other headsets definitely allow multiple accounts and users.

Secondly, even though the Vision Pro contains many cameras and other sensors, Apple claims that the data coming from those is processed at the system level and individual apps never get access. This is not completely surprising as it works the same on most other XR headsets, but Apple apparently wants to stress this point. It is true that some other devices do allow direct camera access, such as the HoloLens 2 in Research Mode and the upcoming Lynx R1, but allowing this is more the exception. Obviously, not giving third-party applications access to raw sensor data limits what those apps can do, which is somewhat of a loss. But the privacy implications are probably a step too far for Apple at this point.

Finally, regarding eye tracking we quote https://www.apple.com/apple-vision-pro/, as the information is a bit sparse and hard to interpret:

"Eye input is not shared with Apple, third-party apps, or websites. Only your final selections are transmitted when you tap your fingers together."

There are multiple aspects to this that give pause for thought. First, apparently only "final selections" based on specific hand interaction lead to eye data being sent. But does that mean that you can only interact by tapping with your fingers while looking at one specific position (say a button), or would it also be possible for an app to ask you to (say) outline a selection of objects using your eyes? The latter can be a useful eye interaction method, but it's not clear if this is supported. Maybe Apple doesn't think that all types of eye interaction are useful or natural (and they might be right), and so only supports a limited set. Or maybe it gets in the way of privacy principles regarding eye tracking data.

And this brings us to the second point: if eye tracking data isn't available to normal apps then that limits the research potential of the device in this area. Other devices, such as the HoloLens 2 and Quest Pro (and the upcoming Lynx R1 with eye tracking extension) can be used for research needing eye tracking input. Of course, the privacy aspects of this are to be considered, but Apple making the decision for you is not ideal.
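The look-and-tap model described in Apple's statement can be sketched in a few lines. This is only an illustration of the principle, not Apple's implementation: the class and method names (`GazeGate`, `update_gaze`, `pinch`, `Selection`) are hypothetical and do not correspond to any visionOS API.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Selection:
    """The only information an app receives: which element was chosen."""
    element_id: str


class GazeGate:
    """Hypothetical system-level layer that keeps gaze data away from apps."""

    def __init__(self, on_select: Callable[[Selection], None]) -> None:
        self._on_select = on_select                 # app-provided callback
        self._gazed_element: Optional[str] = None   # private to the system layer

    def update_gaze(self, element_id: Optional[str]) -> None:
        # Called continuously by the (hypothetical) eye tracker.
        # Nothing is forwarded to the app at this point.
        self._gazed_element = element_id

    def pinch(self) -> None:
        # A finger tap confirms the selection; only now is the app notified.
        if self._gazed_element is not None:
            self._on_select(Selection(self._gazed_element))


received: list[Selection] = []
gate = GazeGate(on_select=received.append)

gate.update_gaze("menu")         # user glances around; app sees nothing
gate.update_gaze("play_button")
gate.pinch()                     # app learns only the final target

print(received)
```

In such a design the app never sees the gaze path, which is good for privacy but also illustrates why continuous eye tracking data would be unavailable for research applications.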

Current EU draft legislation related to AI and biometric data is also an interesting development to keep track of. If this becomes law it might affect current XR devices in general that gather biometric data (most likely using AI), such as through iris scanning and face and eye tracking. Research applications in general could also be affected. For example, "emotion recognition systems in [...] workplace, and educational institutions" could be banned.

Development

Since WWDC is a developer conference there are quite a few introductory-level presentations on developing for the Vision Pro.

Using Apple's existing native SwiftUI and RealityKit frameworks interesting spatial applications can be built for the Vision Pro. Interactions based on hand gestures or eye interaction are available, but as noted earlier direct access to eye tracking data is not available to an app. A "privacy-preserving" layer provides apps with only a very high-level look-and-tap interaction, and is the only way in which an app can react to where a user is looking. One interesting choice when it comes to shared space applications is that an app's volume is limited to a specific world size. Any 3D content the app displays will always stay within this volume (and get clipped if it's too large). This prevents apps from taking up all 3D space and possibly interfering with other apps running at the same time. The Vision Pro OS therefore manages the virtual surroundings and that's probably a good thing.
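The clipping behaviour of an app volume can be illustrated with a simple axis-aligned box intersection. This is a conceptual sketch only: the `Box` type, the `clip_to_volume` function and the 1 m volume size are hypothetical, and visionOS performs the actual clipping in its own renderer.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its minimum and maximum corners (metres)."""
    min_corner: Vec3
    max_corner: Vec3


def clip_to_volume(content: Box, volume: Box) -> Optional[Box]:
    """Return the part of `content` inside `volume`, or None if nothing is left."""
    lo = tuple(max(c, v) for c, v in zip(content.min_corner, volume.min_corner))
    hi = tuple(min(c, v) for c, v in zip(content.max_corner, volume.max_corner))
    if any(low >= high for low, high in zip(lo, hi)):
        return None  # content lies entirely outside the app's volume
    return Box(lo, hi)


app_volume = Box((-0.5, -0.5, -0.5), (0.5, 0.5, 0.5))  # 1 m cube, hypothetical size
model = Box((-0.2, -0.2, -0.2), (0.8, 0.3, 0.2))       # sticks out along x

visible = clip_to_volume(model, app_volume)
print(visible)
```

Here the part of the model beyond x = 0.5 is cut off, so it cannot intrude on the space of a neighbouring app.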

The scale of immersiveness mentioned earlier is reflected in the different programming options, including controls over how elements from reality, such as the passthrough camera image and hands, are shown in an immersive app. Support for group immersive spaces, where multiple Vision Pro users share the same immersive space, seems to be available from the start.

A really nice WWDC presentation is "Design for spatial input", which gives design guidelines and best practices for eye and hand interaction in apps developed for Vision Pro. The content shown can even be applied to other XR headsets.

As lots of development in the SURF community uses either Unity or Unreal Engine, there's good news and bad. First the good news.

Support for Unity has been in development over the past 2 years and existing Unity projects can be ported to the Vision Pro with a bit of work. How much work depends on the type of application (fully immersive VR, versus shared space app) and the particular choices made in the project in terms of render pipeline and shader types. Porting instructions are provided by Apple, so that will hopefully help at the time an application needs to be ported.

For shared space apps (with multiple different applications all showing at the same time) rendering needs to be performed with the RealityKit framework and not by Unity itself. To make this easier new Unity PolySpatial tooling is provided that can automatically translate a lot of a Unity project's components and configuration. Not every configuration of Unity project is supported, though.

A feature to "play to device" might be welcome for Unity developers, as it allows testing a Unity application directly on the Vision Pro during development (or more likely in the device simulator provided by Apple for now). This can help with faster development iterations, as changes in the Unity editor can quickly be checked without having to go through a full deployment step. This only works for shared space applications, though, not for fully immersive VR applications.

Finally, you can apply to a beta program for getting started with Unity PolySpatial to develop for visionOS.

The bad news mentioned above is that Unreal Engine is not supported at all at this point, as far as we can tell. This might have something to do with the lawsuit that Epic Games (developers of Unreal Engine) brought against Apple, related to app store payment methods. It will be really interesting to see if Unreal Engine support will come at some point.

A positive development is the announced support for WebXR in the Safari browser, but only on the Vision Pro (and not iPhone and iPad) it seems.

Coming back to the point of terminology: specific "Augmented Reality features" were presented that are available to Unity applications, namely plane detection using ARKit, the world mesh and image markers. Using these features does require extra permissions from the user, though. And somewhat more developer-focused, the traditional 2D flat "window" can now also be volumetric, showing 3D content that can be interacted with.

Other somewhat interesting terminology is the set of application immersion styles available to developers, where an app can have either a mixed, progressive or full immersion style. Mixed immersion is then merely a few 3D objects overlaid on the real world, while progressive immersion means the user sees a fully virtual application in front of them, but reality is still visible to the sides and back ("the bridge between passthrough and a fully immersive experience"). Having such a scale of immersion styles allows the Digital Crown wheel to control the amount of immersion, although the styles can be changed using hand gestures as well. The progressive immersion style isn't something we've seen a lot, although the Lynx R1 solar system demo (ironically the same example used by Apple) had a similar concept.

Amid all the positive responses to the Vision Pro launch, one Twitterer did warn that XR developers will soon become familiar with "the walled garden of all walled gardens". And that is definitely a point of contention, as Apple mostly does its own thing, is not particularly concerned about other platforms and frameworks, and limits developer control.

Availability and final thoughts

The Vision Pro won't be available until "early next year", and then initially in the US only. The headset even features prominently on the US version of the Apple store webpage, but not in other countries.

In the Netherlands it could take until September 2024, or even later, until we can get the device here. Or maybe it will only become available from the second iteration onwards, skipping Vision Pro 1 entirely. Sony, which supplies the Vision Pro displays, is reportedly not willing to increase their production. Partly due to this restriction, initial yearly supply of the Vision Pro might be only 400,000 to 500,000 devices (compared to "20 million" Quest 2 headsets sold in 2022).

Given all the aspects above, the high price, and the estimate that the Vision Pro will be too high-end for the average education and research XR workload anyway it might be some time before we see one in the wild within the SURF community. Or at the SURF offices (although we'll try to get one for testing).

However, Apple is really trying to get developers started to develop for Vision Pro, even though the device is not available yet (but an SDK with a simulator might be soon). Interested developers should keep an eye out for possibilities to get a developer kit, or access to one of the Vision Pro "developer labs". These will be set up initially in 6 cities, but unfortunately none in The Netherlands (Munich being the closest).

But if you want to experiment and/or get development experience with a feature set similar to Apple Vision Pro then the *Meta* Quest Pro would actually not be a bad option. It is available right now for 1/3rd of the price of the Vision Pro (it got its price reduced recently, just like Quest 2). It features hand, eye and face tracking, provides camera passthrough and good visual quality using pancake lenses. Together with support for the Unity game engine (and even WebXR) it can help prepare for virtual and mixed reality workloads that could run on Apple Vision Pro in the future. Sure, you won't get the slick hand-eye interaction Apple built (although you can try to mimic it yourself), nor the integration with iOS apps, nor the reverse passthrough display, nor the other Apple feel, nor ...

This is also why Apple stepping into the XR market is such a big deal and will hopefully spur more competition from (at least) Meta in the coming years. Especially when it comes to software and usability the latter has a lot to learn from Apple.

Looking a bit further out the expectation is still that Apple and other vendors will continue to develop "AR glasses". These would have a more acceptable glasses-like form-factor, instead of the current bulky headsets that fully close you off from the real world. These would not aim for fully immersive realities, but focus more on mixed reality usage. The Vision Pro might very well be a stepping stone towards that goal.

All in all, the world of XR just got a lot more interesting, thanks to Apple!

Any thoughts or reactions? Leave them below!

Further reading

- Hands-on reports from TechCrunch, RoadToVR and Upload VR

- A 30-minute video summary by Norm from Tested with his impressions and thoughts.

- Ben Lang, of Road to VR fame, and one of the reviewers that actually got to try the Vision Pro, did a Reddit ask-me-anything.

- An opinionated and in-depth technical look at the Apple Vision Pro hardware, compared to the Meta Quest Pro, by Karl Guttag.
