
Psychological advice

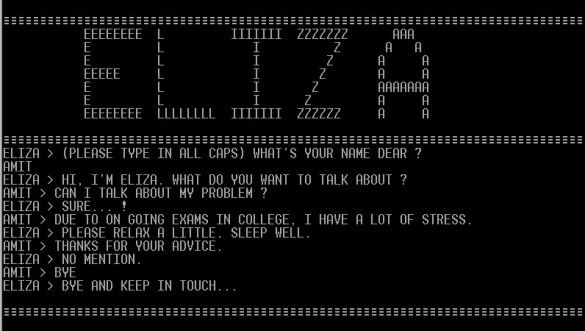

The first computer program to use a very basic form of a language model was Eliza. It was developed more than half a century ago, in 1966, and acted like a chatbot giving "psychological advice" to the user.

Eliza was one of the first programs to apply natural language processing (NLP). Its underlying model relies on simple pattern matching rather than on anything learned from data: Eliza identifies keywords in the user input and returns a matching pre-programmed answer.
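To make the keyword matching concrete, here is a minimal sketch in Python. The rules and answers below are invented for illustration and are not Eliza's original script; the names `RULES`, `FALLBACK` and `respond` are hypothetical.

```python
import re

# Hypothetical keyword rules, invented for this example (not Eliza's real script).
RULES = [
    (re.compile(r"\b(mother|father)\b", re.IGNORECASE),
     "Tell me more about your family."),
    (re.compile(r"\b(sad|unhappy)\b", re.IGNORECASE),
     "I am sorry to hear that. Why do you feel that way?"),
    (re.compile(r"\bi need (.+)", re.IGNORECASE),
     "Why do you need {need}?"),
]
FALLBACK = "Please go on."


def respond(user_input: str) -> str:
    """Return the pre-programmed answer of the first rule whose keyword matches."""
    for pattern, template in RULES:
        match = pattern.search(user_input)
        if match:
            # Re-use the last captured text, without trailing punctuation.
            captured = match.group(match.lastindex).rstrip(".!?")
            return template.format(need=captured)
    return FALLBACK


print(respond("I need a holiday."))
print(respond("Nice weather today"))
```

The program never "understands" the input: anything that does not contain a known keyword falls through to the same generic answer.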

Chatbots

Today, we use applications daily that work with language models that are, fortunately, a lot more sophisticated. Whether you are shopping online or checking your balance, chatbots are ready to answer your questions. Think also of using Google Translate to convert your emails into the desired language, or letting your phone auto-complete a sentence while you type. Of course, these translations and answers are not yet perfect in practice, but they have come a long way since Eliza. Let's review how far they have come.

What can language models do?

What tasks can language models perform that make our lives easier? One important function is classifying text. By interpreting texts from customers, a program can determine whether a text is positive, neutral or negative in tone. Such sentiment analysis can be used to automatically derive scores from the way users write their comments, for example for movies on IMDb or products on Amazon.
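As a rough illustration of what classifying tone means, the sketch below counts positive and negative words. This is a deliberate simplification: the word lists are made up for the example, and a real language model learns such associations from data instead of using a fixed list.

```python
# Hypothetical word lists, chosen only for this example.
POSITIVE = {"great", "good", "excellent", "love", "wonderful"}
NEGATIVE = {"bad", "terrible", "boring", "hate", "awful"}


def classify_sentiment(text: str) -> str:
    """Label a text as positive, neutral or negative by counting tone words."""
    words = [word.strip(".,!?").lower() for word in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for review in ["I love this movie, it is great!",
               "Terrible plot and boring characters.",
               "The movie lasts two hours."]:
    print(review, "->", classify_sentiment(review))
```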

Machine translation is another well-known application of language modeling, and it is advancing rapidly. Whereas up to about five years ago a translation done by Google was still synonymous with a "very clunky, incoherent piece of text", in recent years neural machine translation has become very accurate. DeepL is another example with state-of-the-art translation capabilities, and new languages are being added every year.

Other examples include automatic summarization, which extracts the most important information from a given text, and question answering, which forms the basis of the modern chatbot.

In almost all the tasks mentioned above, the application generates text using a language model. To formulate a proper answer or summary for the user, the model interprets the given prompt and produces novel sentences. While previous language models were already generating reasonable paragraphs, this is where ChatGPT excels. ChatGPT produces extremely plausible and coherent text because it was trained on an immense set of data. It is a variant of GPT-3 whose training was extended in an interactive, chatbot-like environment, so ChatGPT effectively uses earlier user inputs to formulate new sentences.

A next, trendy step in language modeling is to combine text and images. Recently, OpenAI introduced DALL-E, a model that takes text as input (a prompt) and synthesizes a novel image (see below).

The evolution of language models

We have now seen what language models can do, with ChatGPT's performance as a preliminary pinnacle (although there are caveats to that, more on which later). But how do language models actually work?

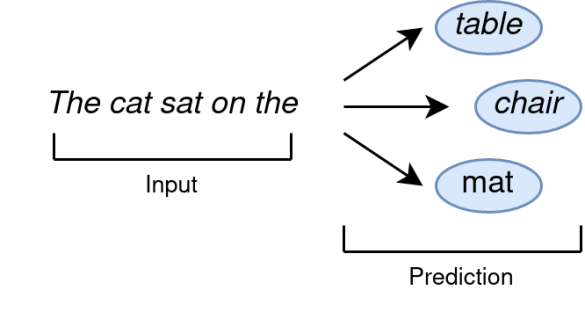

The basis for the first language models was (and still is!) predicting the next word in a given sentence. The language model kept a "dictionary" which, for a large number of word combinations, indicated how often they occurred in the text the model was exposed to. After each word, the computer recalculates which word is statistically most likely to follow the last one, and picks that word. You can see how this works in basic terms in the image below, where the sentence "The cat sat on the mat" is formed.

These purely statistical models can only generate sentences based on already known word combinations, so they are unlikely to create new word combinations themselves.
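The "dictionary" of word combinations can be sketched in a few lines of Python. This is a minimal example under simplifying assumptions: it only looks at pairs of adjacent words, the training text is a made-up toy corpus, and the function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_counts(text: str) -> dict:
    """Count, for every word, how often each other word directly follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def predict_next(counts: dict, word: str):
    """Return the most frequent follower of a word, or None if the word is unknown."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy training text: two short sentences glued together.
counts = train_counts("the cat sat on the mat the cat sat down")

print(predict_next(counts, "the"))  # "cat" follows "the" twice, "mat" only once
print(predict_next(counts, "dog"))  # never seen in the training text
```

The second call shows the limitation: a word the model has never seen has no followers in the dictionary, so there is nothing to predict.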

Recurrent Neural Networks

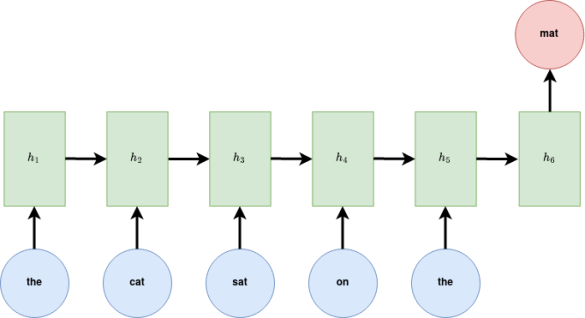

Later came the first neural networks, which are more powerful than the traditional statistical language models and, above all, can learn patterns from the data. The objective for the network remained the same: predict the next word given an input sentence. The type of neural network commonly used for language is the recurrent neural network, which reads a given text word by word and re-uses what it has learned so far when predicting the next word. At the core is a set of trainable parameters, applied recurrently, which enables the model to generate new word combinations that were not observed in the data.

Recurrent neural networks have advanced language modeling but are limited by their sequential nature: a predicted word is added to the result, and this new result is run through the model again, and so on. As a result, words at the beginning of the sentence have less influence on a newly added word than words closer to it. In the example above, the word sat will have more influence on the choice of the word mat than the word cat, because cat occurs earlier in the sentence.
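The fading influence of early words can be shown with a very small recurrent model. The sketch below is a toy under stated assumptions: the state is a single number, the weights are hand-picked instead of trained, and plain numbers stand in for words.

```python
import math

# Hypothetical, hand-picked weights; a real network learns these from data.
RECURRENT_WEIGHT = 0.5  # how much of the previous state is carried over
INPUT_WEIGHT = 1.0      # how strongly the current word enters the state


def final_state(inputs):
    """Read the inputs one by one and return the last hidden state."""
    state = 0.0
    for x in inputs:
        # The same two parameters are re-used at every step (the recurrence).
        state = math.tanh(RECURRENT_WEIGHT * state + INPUT_WEIGHT * x)
    return state


def influence(inputs, position, delta=0.5):
    """How far the final state moves when one input is nudged by delta."""
    nudged = list(inputs)
    nudged[position] += delta
    return abs(final_state(nudged) - final_state(inputs))


# Toy numbers standing in for the words of a four-word sentence.
sentence = [1.0, 0.5, -0.5, 0.2]
print("nudging the first word:", round(influence(sentence, 0), 4))
print("nudging the last word: ", round(influence(sentence, 3), 4))
```

Because the state is multiplied by the recurrent weight at every step, a change to the first word is damped several times before it reaches the end, while a change to the last word arrives undamped.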

Transformers

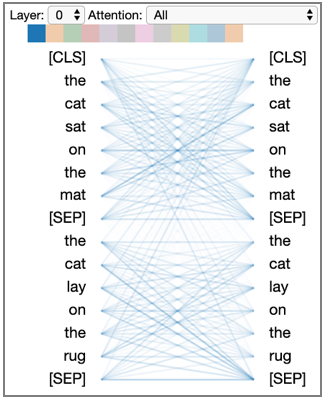

Since 2017, a new type of model architecture, the so-called Transformer, has been on the rise. This architecture allows models to become very large and powerful, which is why they are referred to as large language models (LLMs); ChatGPT is one example. Transformers can parse an input sentence in parallel: they process all the words in a sentence simultaneously instead of word by word, as sequential models do. As a result, Transformers are much faster and can process more data.

Transformers work with the concept of attention: for each word in the input, the model learns how it is related to every other word in the input. They look at the entire preceding sentence when determining the next word, whereas sequential language models implicitly weight words by position: the closer a word is to the end of the sentence, the more it weighs in determining the next word. Long-term dependencies, that is, relationships between words over greater distances in the sentence, are therefore modeled better by Transformers. Keeping track of prior words is essential in text generation, as the overall structure and meaning of the text should stay coherent.
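A minimal sketch of the attention idea, assuming the common scaled dot-product form, is given below. It is heavily simplified: the word vectors are made up, there are no learned parameters, and every word may look at every other word (a text generator would additionally hide the words that come later).

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """For each word vector, weigh all word vectors by similarity and mix them."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this word to every word, independent of distance.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up vectors: the first and third word are similar, the second is not.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
_, weights = self_attention(words)
print([round(w, 3) for w in weights[0]])
```

In this toy input the first word gives the third word the same weight as itself, even though another word sits in between: the weight depends on how related the words are, not on how far apart they stand.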

Billions of parameters

As computers have also become more powerful, language models today have hundreds of billions of parameters which they can use to generate new text. In just two years, between 2018 and 2020, state-of-the-art language models became more than 500(!) times larger. Combine this with an almost infinite amount of (internet) data available to train the network on, and it becomes clear why models can do more and more, with the amazing results shown by ChatGPT as the preliminary highlight.

Risks

The fact that the current generation of language models can do so much is of course very impressive, but it has a downside: the models are so complex that we have to think of them as black boxes for the time being. We have only limited insight into exactly how models arrive at their output.

That entails a number of risks, which we have already seen in practice. One such risk is bias in the model with respect to race, gender, age, etc. Language models are trained with texts from the internet, and those texts are often not neutral. Looking at how a trained model associates gender with specific jobs, the model seems to have prejudices around words like developer or chief. Tay, a chatbot trained to interact with Twitter users, sparked controversy after tweeting offensive and anti-Semitic content, caused by real Twitter users who fed it their offensive language as training data.

Tay is also an example of misuse of language models. The increasing likelihood of misuse in academic and educational settings is also a hot topic. Texts generated by language models are hardly distinguishable anymore from texts written by humans. Of course, this makes it tempting to let the computer do the work when writing theses or articles (How do you know we didn't use ChatGPT to write this article? We didn't!), or to use ChatGPT for a take-home exam. How do you take this into account when designing homework assessments? What about when looking for genuine information online? How do you arm yourself against that as a teacher?

A different issue is the correctness of texts generated by language models. Language models can be made to produce grammatically correct texts, but they are trained on texts that have been written before, mostly from internet sources. And what is on the internet is not necessarily true. So there is always a risk that a language model will generate a text whose content is incorrect. We need to remain mindful of that, even when ChatGPT sounds very assertive. A famous example is ChatGPT answering "peregrine falcon" to the question "what is the fastest marine mammal".

The near future of language models

In recent years, models have become increasingly complex and a lot of money has been spent on the computing power to make them do their job. In the coming years, the focus will stay on finding the maximum capability of these relatively new Transformers. There will also be research on doing this with large amounts of high-quality data and in an energy-saving way. After all, to properly train the next generation of models, more and more data will be needed. Models today have billions of parameters, and it is a challenge to provide all those parameters with sufficient (domain-specific) training data. Additionally, because of their sheer size, training and hosting such models is limited to the biggest corporations like Google and Meta, which gives them a lot of control.

Language models are now mainly used to generate text, but we already see them proving their value in other domains as well. For instance, by using not only text but also video and speech as input, they can also process non-verbal expressions, such as irony, and even sign language. This makes the possibilities of language models even more extensive. Language models can also be “fine-tuned” (i.e., trained further on very specific datasets) to make them work better in specific domains, such as combining medical text with medical images, or scanning and interpreting ancient Dutch.

Language models are here to stay

Looking at the developments in AI over the past few years, it is very likely that the state of the art will continue to surprise us in the years to come. So let's not focus only on our fear that these new models will write better texts than humans, but take a realistic perspective on their potential and impact. Let's work out how language models can support us in improving teaching and research. How do we ensure that people and models complement each other rather than compete? That is where the greatest opportunities lie.

Learn more

- Read the blog of Duuk Baten (responsible AI advisor) on the impact of ChatGPT in education: https://communities.surf.nl/en/ai-in-education/article/how-big-can-the-…

- Read the blog of Erdinç Saçan on ChatGPT in education by ChatGPT: https://communities.surf.nl/ai-in-education/artikel/dit-is-niet-geschre…

- Want to learn more? Reach us at thomas.vanosch@surf.nl or vivian.vanoijen@surf.nl, or for general AI information at ai-info@surf.nl or visit www.surf.nl/ai.

This blog was made possible by Jan Michielsen and Vivian van Oijen.
