Duuk Baten

Digital innovation for education and research. At SURF, I am currently working on…

In a rapidly evolving world, artificial intelligence emerges as a transformative force in various research domains. While its potential is undeniable, the challenges it presents can't be overlooked. In a short survey we aimed to gauge the current state of the responsible application of AI in research. Preliminary findings indicate that promising steps are being made, but that significant gaps remain in institutional guidance and support, as well as in the practical implementation of responsible AI practices.

Authors: Matthijs Moed, John Walker, and Duuk Baten

For a few years now, we have seen machine learning change existing research practices, replacing traditional statistics or numerical analysis with deep learning and neural networks [1]. The possibilities of AI have also enabled new research practices and have had an impact on fields such as weather simulation, life sciences, and astronomy [2]. However, the growing potential of AI technologies also brings new challenges. As the European Union puts it, ‘trustworthy AI’ needs to be lawful, ethical, and robust [3]. So how do we guarantee compliance with laws and regulations? How do we deal with the impact of potentially biased models once they are applied? How reliable are our models, and can we accurately assess their biases and inaccuracies? How do the ‘black box’ properties of AI models affect the scientific need for explainability and reproducibility?

To gain better insight into the needs and challenges researchers face, we launched a short survey on responsible AI in research. These insights can help us formulate and prioritise activities. To structure the survey, we used two existing frameworks: the European Commission guidelines for trustworthy AI, and the AI ethics maturity framework by the Erasmus School of Philosophy.

Note that our findings are based on a relatively small sample (n = 21), and our approach to data gathering and analysis was not systematic. Nevertheless, our aim is to offer a 'thermometer' of sorts: a sense of where our members currently stand and which needs they may have. Respondents are a mix of researchers, research support staff, and managers, drawn from more than a dozen institutes, including universities, universities of applied sciences, and university medical centres.

In 2019 the European Union’s High-Level Expert Group on Artificial Intelligence published its Ethics Guidelines for Trustworthy Artificial Intelligence [3]. According to these guidelines, trustworthy AI should be lawful, ethical, and robust.

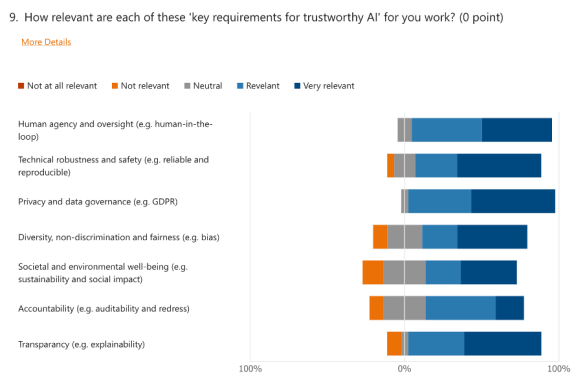

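They set out seven key requirements that AI systems should meet in order to be deemed trustworthy: human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination and fairness; societal and environmental well-being; and accountability.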

The requirements are accompanied by a specific assessment list to guide developers and deployers of AI in implementing the requirements in practice [4].

The first finding of our ‘thermometer’ was that most respondents indicate that they have a good understanding of what responsible AI (RAI) means in the context of their work, and that they already use RAI methods and approaches. These approaches are primarily about ensuring privacy and security compliance, implementing data management practices, and using fairness and explainability toolkits. Correspondingly, the major risks respondents identify are privacy, security, bias, and transparency.

There is broad recognition of the relevance of all the key requirements of trustworthy AI set out by the European Commission, in particular privacy and data governance, transparency, technical robustness, and human oversight. This suggests that our respondents generally regard responsible AI as relevant to their work.

A third of respondents indicate that it is unclear to them what their organisation expects of them when it comes to implementing RAI in practice. Only one respondent reported having received training related to responsible AI, and that training focused mostly on data privacy and research ethics. Lastly, about a third of respondents noted that their institution provides guiding policies or frameworks for responsible AI practices. Respondents also regularly mentioned the role of ethics committees in their research practices (if the role of ethics committees in computer science research is of interest to you, consider subscribing to the ethics committee network of IPN).

These results suggest that a more general approach to responsible AI, beyond compliance and legal checks, is not yet widespread. This is echoed by the self-reported maturity levels.

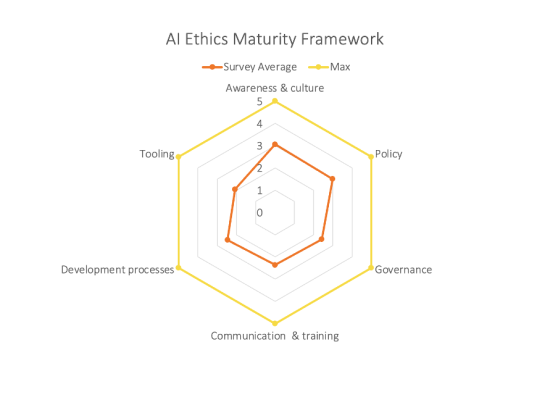

In 2022 researchers from the Erasmus School of Philosophy and School of Management published a model for assessing the maturity of AI ethics in organisations [5]. The authors recognise the need for organisations to go from the ‘what’ of AI ethics to the ‘how’ of governance and operationalisation.

They propose a holistic approach along six dimensions: awareness & culture, policy, governance, communication & training, development processes, and tooling.

The level of maturity reached by an organisation is assessed with the following scale:

By assessing their maturity along the six dimensions, organisations can identify gaps and formulate a strategy to advance their AI ethics procedures.

Overall, the reported maturity level varies between ‘repeated’ and ‘defined’, with significant differences among the six dimensions of awareness & culture, policy, governance, communication & training, development processes, and tooling. A significant group responded ‘I don’t know’ to the questions about maturity, which suggests the actual maturity levels might be lower.

There is significant awareness of responsible AI practices at the individual level and, to some extent, at the organisational level. In general, organisational policy has been defined with standardized processes and is mostly about compliance with privacy and security regulations. Consequently, governance is implemented through legally mandated checks, which individual teams and projects refine and make repeatable.

Respondents indicate that communication and training are generally lacking. Where initiatives for training and communication exist, they are small-scale, and a third of respondents state that there is minimal to no communication at all in their organisation.

As for the more technical implementation of responsible AI practices, there is some use of tooling, and a clear demand for tools and services to investigate bias, and to improve explainability and reproducibility.

Lastly, respondents identify three main areas in which SURF could play a role: tools and services, (national) policy frameworks or best practices, and community activities and knowledge sharing.

We recognize SURF can play a role in these activities and developments. However, for this we need your help.

Whether you are interested in knowledge sharing about responsible AI, work on the practical implementation of responsible AI practices, or are looking to develop policy instruments, we would like to collaborate. Please get in touch with duuk.baten@surf.nl.
