Patrick Hochstenbach

I have worked for over 25 years in academic libraries at Ghent University… More about Patrick Hochstenbach

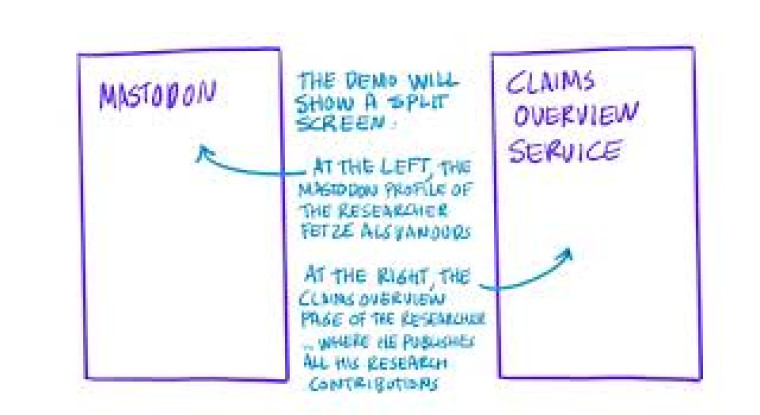

In an experimental collaboration, the SURF Open Science Innovation Team and IMEC-IDLab at Ghent University in Belgium are exploring whether Mastodon can be used to semi-automatically collect information about any type of research contribution. The idea is to ask researchers to do little more than post a 'toot' on Mastodon mentioning such a contribution. Smart agents (named “claimbots”), developed by SURF and IMEC, will monitor these toots and handle the complex task of extracting metadata from such toots and populating research productivity portals.

Mastodon is an open-source platform for microblogging and social networking, an alternative to the services provided by tech giants such as Twitter (X Corp), Threads (Meta) and LinkedIn (Microsoft).

In contrast to centralized social networking services (such as Twitter), Mastodon is a decentralized platform composed of dozens of independent communities worldwide that users can join (see for example Academics on Mastodon). Using 'toots' (the Mastodon equivalent of Twitter/X 'tweets'), researchers can share insights into their academic life, including updates and reflections on the scientific work they are involved in, and research activities such as attending a conference or recording a podcast.

SURF is experimenting with Mastodon as an informal medium for scholarly communication, enabling researchers to promote their work, share aspects of academic life and, by tracking this communication, enhance the transparency of the research process.

These 'toot' messages are not only interesting from a social viewpoint; they can also be used to automatically track and catalogue research output and ease some of the administrative burdens that researchers are confronted with.

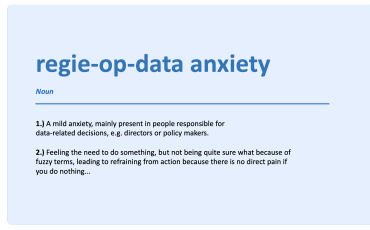

Research assessment plays a significant role in academic life and is a key mechanism for allocating funding and advancing academic careers. It also creates an additional burden for researchers, as they must annually compile publication lists and overviews, which frequently requires metadata to be entered manually into database systems.

In addition, the scope of research assessment is evolving: to measure academic excellence, information should be collected not only about traditional publications (e.g. journal articles and book chapters) but also about many non-traditional sources. This is reflected in the 2020 position paper “Room for everyone’s talent” published by Dutch public knowledge institutions and research funders (VSNU, NFU, KNAW, NWO, and ZonMw), where academic excellence is defined by 'REIL contributions', representing research (R), education (E), impact (I) and leadership (L).

Research contributions can be found in many places beyond the traditional publications like journal articles and books. New types of research contributions such as experiments, software and datasets have become an integral part of publication lists.

Researchers also share knowledge as educators, delivering lectures, preparing course materials, and even creating blog posts or videos to make their expertise accessible to a wider audience.

Furthermore, research findings can also be featured in non-academic media such as newspaper articles, television news segments, interviews, and documentaries. Researchers can also fulfill professional roles as organizers of workshops and conferences, journal editors, and advisors to political bodies and businesses. Collectively, all these contributions significantly enhance a researcher's academic value and are classified as REIL contributions.

To a large extent, information about REIL contributions can be found on the web, or at least leaves a trace there. Open-source software contributions can be found in source code archives and datasets in data spaces; lectures often have a homepage; media appearances can be discovered on news sites; and even leadership roles are advertised on the web pages of many conferences and workshops. Such web traces provide an input for generating researcher contribution lists.

To collect REIL activities, researchers could be asked to report on all their contributions, much like they do for traditional publications. This would involve using a research productivity portal to input metadata for various activity types. The concern is that if current cataloguing tasks are already perceived as an administrative burden, expanding these requirements to include many more activities will only increase that burden. This raises the question of whether information about individual REIL activities could instead be downloaded from the web in some way.

The answer is yes and no. While traditional publications benefit from dedicated scholarly portals like Web of Science, Google Scholar, Scopus, and OpenAlex that already provide useful metadata, the same isn't true for non-traditional REIL activities. It's extremely difficult to gather information about source code, lectures, or media appearances by academics from the open web. Commonly used aggregation techniques, such as identifying researchers and research artifacts by persistent identifiers (PIDs) like DOIs and ORCIDs, are not available for these kinds of contributions.

Without a way to differentiate researchers' REIL contributions from the general web content, a web search for "Dutch research output" will be ineffective.

In 2023, SURF launched a Mastodon pilot that can be used by students, researchers and staff of Dutch research institutions. For the current project, we are experimenting with alternative strategies to aggregate information about REIL claims and are exploring Mastodon as an informal communication platform to do so. Our hypothesis is that if researchers use a social networking platform like Mastodon to discuss their REIL activities, the discovery of REIL activities could become as simple as listening to informal conversations, such as researcher Carol telling her colleague:

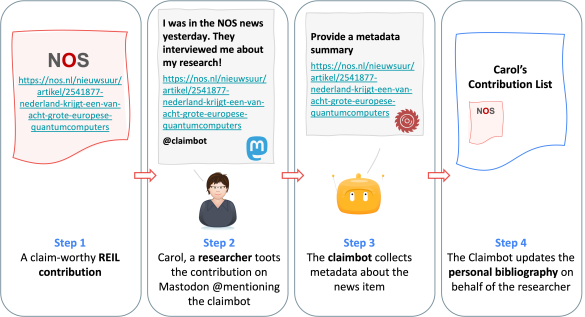

“I was in the NOS news yesterday! They interviewed me about my research https://nos.nl/nieuwsuur/artikel/2541877-nederland-krijgt-een-van-acht-grote-europese-quantumcomputers”

If such a post were “tooted” on Mastodon, the experimental claim service we have developed could in principle identify the researcher who posted the message (in this case Carol). From the toot, we can also read the URL that links to the news article at the NOS news agency. Based on this URL, we can use smart technologies to extract descriptive metadata about the news article, such as the title, the author, the date of publication and the type of web page. This information would be sufficient for many researcher assessment workflows. The only missing link is an indicator that this URL qualifies as a REIL activity that the researcher would like to register as an academic contribution (and is not some other informal topic).
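The two steps described above, reading the URL from a toot and pulling descriptive metadata from the linked page, can be sketched in a few lines of Python. This is only an illustration and not the extraction method used in the experiment: the function names are hypothetical, the toot is treated as plain text, and the page is assumed to expose Open Graph or similar `<meta>` tags, which not every site does.

```python
import re
from html.parser import HTMLParser

# Assumption: the toot is available as plain text, not as rendered HTML.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")


def extract_urls(toot_text):
    """Return the http(s) URLs in a toot, with trailing punctuation stripped."""
    return [url.rstrip(".,;:!?)") for url in URL_PATTERN.findall(toot_text)]


class MetaTagParser(HTMLParser):
    """Collect the <title> text and all <meta name/property=... content=...> pairs."""

    def __init__(self):
        super().__init__()
        self.meta = {}
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            key = attrs.get("property") or attrs.get("name")
            if key and attrs.get("content"):
                self.meta[key.lower()] = attrs["content"]

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def describe_page(html):
    """Map common <meta> tags to the fields named in the text (hypothetical field names)."""
    parser = MetaTagParser()
    parser.feed(html)
    meta = parser.meta
    return {
        "title": meta.get("og:title") or parser.title.strip() or None,
        "author": meta.get("author") or meta.get("article:author"),
        "date_published": meta.get("article:published_time"),
        "page_type": meta.get("og:type"),
    }


if __name__ == "__main__":
    # Made-up toot and page, for illustration only.
    toot = "They interviewed me about my research https://example.org/news/123."
    sample_html = (
        '<html><head><title>Fallback title</title>'
        '<meta property="og:title" content="Sample headline">'
        '<meta property="og:type" content="article">'
        "</head></html>"
    )
    print(extract_urls(toot))
    print(describe_page(sample_html))
```

In practice the HTML would first have to be fetched from the extracted URL, and pages without such tags would need a different extraction strategy.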

One of the key elements of our approach involves the researcher tagging their Mastodon posts as REIL claims. When a researcher wants to document a REIL contribution, in addition to tooting a message to their followers, they also address their toot to a 'claimbot' account to claim a REIL activity, like this:

"I was in the NOS news yesterday. They interviewed me about my research https://nos.nl/nieuwsuur/artikel/2541877-nederland-krijgt-een-van-acht-… @claimbot"

The simple addition of @claimbot to the toot triggers a claimbot in our experimental network*. The claimbot reads the toot and interprets the URL as a REIL activity the researcher wants to claim. The claimbot uses smart technologies to extract metadata from the referenced web page, transforms this information into a machine-readable linked data format and updates the researcher's personal REIL contribution list.
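As a rough sketch of that flow, the Python snippet below checks a toot for the @claimbot mention, takes the first URL as the claimed activity, and wraps it in a small JSON-LD record. The function name, the use of the schema.org vocabulary, and the `contributor` property linking the researcher to the page are all assumptions made for illustration; the article does not say which linked data vocabulary the claimbots actually produce.

```python
import json
import re

# The handle comes from the example toot; matching is case-insensitive here by assumption.
MENTION_PATTERN = re.compile(r"(?<!\S)@claimbot\b", re.IGNORECASE)
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")


def build_claim(author_url, toot_text, metadata):
    """Return a JSON-LD style dict for a claimed URL, or None if the toot is not a claim.

    `metadata` is a dict of already extracted page metadata (hypothetical keys:
    "title", "date_published").
    """
    if not MENTION_PATTERN.search(toot_text):
        return None  # an ordinary toot, not addressed to the claimbot
    urls = URL_PATTERN.findall(toot_text)
    if not urls:
        return None  # nothing to claim
    record = {
        "@context": "https://schema.org",  # assumed vocabulary
        "@type": "WebPage",
        "url": urls[0].rstrip(".,;:!?)"),
        "name": metadata.get("title"),
        "datePublished": metadata.get("date_published"),
        # Assumed way of linking the claiming researcher to the page.
        "contributor": {"@type": "Person", "url": author_url},
    }
    # Leave out fields for which no metadata could be extracted.
    return {key: value for key, value in record.items() if value is not None}


if __name__ == "__main__":
    # Made-up account, toot and metadata, for illustration only.
    claim = build_claim(
        "https://social.example/@carol",
        "They interviewed me about my research https://example.org/news/123 @claimbot",
        {"title": "Sample headline"},
    )
    print(json.dumps(claim, indent=2))
```

A record like this could then be appended to the researcher's contribution list; how that list is stored is a separate design choice.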

In our experiment, this list is kept on a personal Wiki page that the researcher can maintain. The linked data and the Wiki can then be integrated in workflows and research information systems for research assessment purposes.

*Note: this is an early prototype. Adding @claimbot to Mastodon 'toots' does not yet trigger the claimbot for accounts outside of the experiment.

In 2024, SURF ran a simulation test using a database of Mastodon posts from hundreds of academics. This experiment demonstrated that we could automatically generate metadata for traditional and non-traditional REIL activities 91% of the time.

91% of the effort to collect researcher assessment information can be automated.

In a large majority of cases, the extracted information is sufficient to populate researcher assessment databases. This simulation test demonstrated the capabilities of the Mastodon service, our claimbots, and the smart data extraction mechanisms.

As a next step, in Q3 and Q4 of 2025, we plan to work with researchers from universities and universities of applied sciences to review our experimental setup and to collect qualitative feedback about our model, complementing the quantitative results from our 2024 experiment. These results will give us the feedback required to move from an experimental environment to a pilot that can be tested by the broader SURF community.

Would you like to receive more information or want to be involved? Please leave a comment below, or contact us:

Or, reach out on Mastodon: Patrick https://openbiblio.social/@hochstenbach and Thomas https://social.edu.nl/@thomas

If you don't have Mastodon yet, please consider creating a free account on the SURF Mastodon and start tooting and following your colleagues!

This work is done in collaboration with Laurents Sesink (SURF Open Science Lead) and Herbert Van de Sompel (Honorary Fellow DANS).
