Ruud Steltenpool

Physicist, information analyst, certified Carpentries Instructor. Supports…

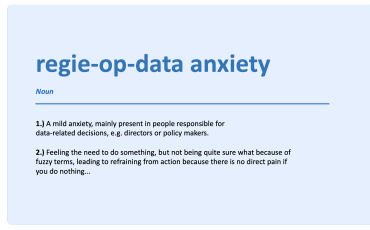

When people say "make data FAIR", I wonder whether they mean doing extra paperwork after the fact, or changing how data are created so they’re FAIR from the start (#bornFAIR). Ideally, adding metadata should feel like a tiny, natural part of researchers’ normal workflows and should give them immediate benefits. We should think about FAIR across the whole organization, not just at the end, which makes it much easier to work with and implement. I have a number of ideas that could make the FAIR process better for researchers and research support. I would like to share them here, and I invite anyone to comment or reach out to see whether we can collaborate.

I say researchers (plural) and workflows (plural) because science is a multidisciplinary team undertaking, even if you look at only one research group and its support. Strangely enough, it sometimes seems as if the complex information system that is a human researcher is reduced to a brain at a screen, overloading colleagues with messages. That makes it hard to go through all the phases of work that lead up to deep work, similar to how a few 'well-timed' short interruptions of your sleep can leave you very tired.

Wasn't a large part of the promise of FAIR data to 'automate away' much of the 'hassle' that frustrates our VALUABLE EXPERTISE FOCUS?

I describe my role as working on the abstraction-rich spectrum of human cooperation: value, meaning, standardization and notation at one end, interaction design at the other, and information expertise (often involving technology) in between to connect it all. From that point of view, I have some thoughts and suggestions that I would like to seriously try (and could use a hand with).

We will never all speak exactly the same language and jargon while meaning the same thing, but there is plenty of room to improve the overlap, also in our data and digital tooling. And I claim that, to improve research, the non-research-specific support departments of the wider organisations should also change their habits towards FAIR.

Current hierarchies, and what is measured within them, are still based to a surprising degree on what the US introduced after World War II, when handling information was mostly a logistics of paper and there were few highly educated 'knowledge workers', let alone ones with the internet in their back pocket. How much of that is still worth disturbing workers for while they are on their way to deep work? Are there organisational layers, meetings, or form-like administration that cost more time and frustration than they save? Do Total Cost of Ownership calculations include the 'end' users' time, and do they take into account what is known about the high cost of task switching? At the same time, lowering complexity (also on the part of lawmakers) and raising the transparency of data models can help consistency.

More than a few employees are amazed at how much time they spend not doing their primary work because of a list of software tools that nobody seems to like, let alone understand in relation to each other. Professor Felienne Hermans, widely known for exposing the best-disguised bullshit in technology and education, wrote about this bureaucracy. Others recognize parts of the Simple Sabotage Field Manual (written by the OSS, the CIA's predecessor) in management policy :-)

But seriously, some suggestions for change:

I hope you found some inspiration.

Build focus together?
